“The Difference Is – Now The Whole World Is Paying Attention To AI,” Says Prof. Serge Belongie

Interview with Serge Belongie, a professor of Computer Science at the University of Copenhagen, the head of the Danish Pioneer Centre for Artificial Intelligence and LDV Capital Expert in Residence.

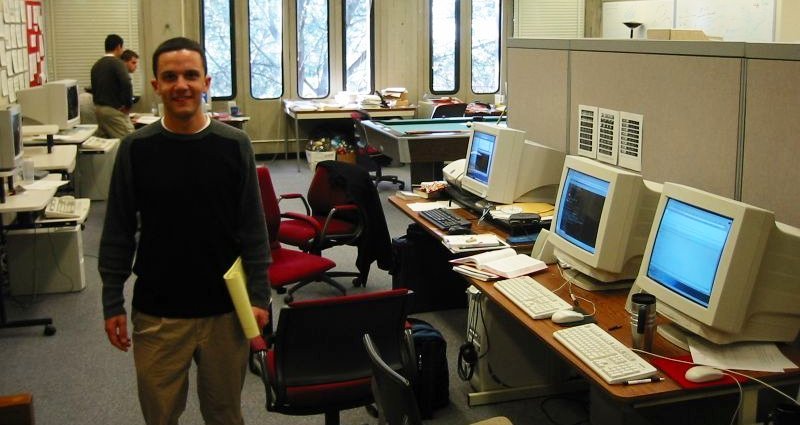

In 1995, Serge Belongie earned a B.S. (with honor) in EE from Caltech, followed by a Ph.D. in EECS from Berkeley in 2000. From 2001 to 2013, he was a professor in the Department of Computer Science and Engineering at the University of California, San Diego.

Serge co-founded Digital Persona, which created the world’s first mass-market fingerprint identification device; Anchovi Labs, a platform for organizing, browsing, and sharing personal photos (acquired by Dropbox); and Orpix, an image recognition framework that supports various application domains.

MIT Technology Review named Belongie to its list of Innovators Under 35 in 2004. In 2007, Serge and his co-authors received a Marr Prize Honorable Mention for a paper presented at the International Conference on Computer Vision (ICCV). In 2015, he received the ICCV Helmholtz Prize, awarded to authors of papers that have made fundamental contributions to the field of computer vision. He has also been honored with the NSF CAREER Award and the Alfred P. Sloan Research Fellowship.

LDV Capital’s Founder and General Partner Evan Nisselson crossed paths with Serge in March 2013. Since then, Evan has been inspired by Serge’s insights, expertise, and passion for helping people succeed. They have worked closely together on our LDV Vision Summit since 2014, and Serge became our very first Expert in Residence in 2016.

As we approach the 10th Annual LDV Vision Summit, we thought it fitting to pose a few burning questions to Serge. We delved into his experiences as a prominent figure in AI and sought his insights on the present landscape of visual technologies. Additionally, we explored his thoughts on the prospects available to researchers aiming to bring their work to market and impact humanity at large.

Kat: What is the driving force behind your passion for computer vision and AI research?

Serge: For as long as I can remember, I’ve been interested in patterns and categorizing things. In junior high, I did a class project on taxonomizing screws, bolts, and other fasteners. By the time I started college, I was interested in patterns in audio, e.g., bioacoustics, such as the vocalizations of birds or whales. When it came to images, I was drawn to fingerprints and human faces. I worked on lip reading from video, and I found everything about that problem fascinating: the fusion of the audio and the visual, the variation between speakers, and the computational challenges. At the time (the early '90s), digital cameras were emerging onto the scene, but they lacked any sort of computational understanding. Today you take it for granted that you'll see little detection boxes around the faces in the viewfinder, or that you'll have smart photo album organization software that knows your whole family. But back then, none of that existed.

I had the sense there would be a huge demand for this kind of technology, and I also loved the math behind it. I wasn't interested in majoring in math or physics, but I loved the techniques that those fields used. You could apply them to sound, video, and images, and use advanced applied math methods in that context to solve deeply interesting problems. Beyond that, I can't really justify the choice because I feel like, somehow, I was put on this Earth to do this.

Kat: Your lab has moved from Cornell Tech to the Pioneer Centre for AI at the University of Copenhagen. What led to this move? Why Denmark?

Serge: As part of my group’s work on Visipedia (capturing and sharing visual expertise), I got to know about the Global Biodiversity Information Facility (GBIF), which is headquartered in Copenhagen. I visited there several times in the context of expanding my group’s work beyond bird recognition into tens of thousands of other species of plants, animals, and fungi. That led to me learning about the AI-related research activity taking shape in Denmark, which in turn led to a summer visit, and eventually, my joining the University of Copenhagen full-time. (It also just so happens that my wife is Danish.)

Kat: What do you feel are the biggest changes between your research 30 years ago and your research today?

Serge: The biggest difference between then and now is that now the whole world is paying attention to AI. The choices we make as researchers go far beyond dusty conference proceedings, reaching into the lives of real human beings, when they apply for bank loans, get medical advice, and so on. This means that AI researchers today cannot turn a blind eye to the human impacts of their work.

Kat: How have your teaching approaches evolved over the years?

Serge: If I could point to one concrete change, it’s the use of Jupyter notebooks, e.g., in Google Colab. In classes involving coding, just getting the development environment in place for a class of 100+ students was a massive headache. Now you can prepare interactive exercises right in the browser, so the barriers to entry have virtually disappeared. Moreover, Large Language Model (LLM)-based tools like ChatGPT and GitHub Copilot allow students to write code faster and avoid syntax errors. Another change that comes to mind is the way I work YouTube videos into my teaching plan. Sometimes a video (e.g., by 3Blue1Brown) is so well made that there’s nothing I could do in a live lecture to top it.

Kat: You co-founded several computer vision startups and one of them has been acquired. What is the most significant lesson you gained from this experience?

Serge: The companies I co-founded were all from the times before the flood of Deep Learning papers. (I call that period “antedeepluvian.”) As such, there is little in the way of methodology I can carry forward into the present. After Deep Learning exploded onto the scene, I continued my entrepreneurial engagements as an advisor to several companies.

What is evident to me, looking back on this experience, is that academia and entrepreneurship need one another. They have complementary strengths and occasionally engage in frenemy behavior, but the movement of people and ideas between academia and industry — starting from the once humble industry internship — has given rise to some incredible advances in AI.

Kat: What is the most critical advice you give to your Ph.D. students if they wish to commercialize their research?

Serge: They should talk to LDV Capital! OK, that’s oversimplifying things, but a great starting point would be to watch the Entrepreneurial Computer Vision Challenge (ECVC) pitches from past LDV Vision Summits. These are real-life Ph.D. students, not MBA types, who had very cool ideas and thought about taking the plunge into commercialization. One example is GrokStyle, which was acquired by Facebook/Meta.

The ECVCs are an excellent window into the transition one must make from “cool science projects” into products that address a burning need.

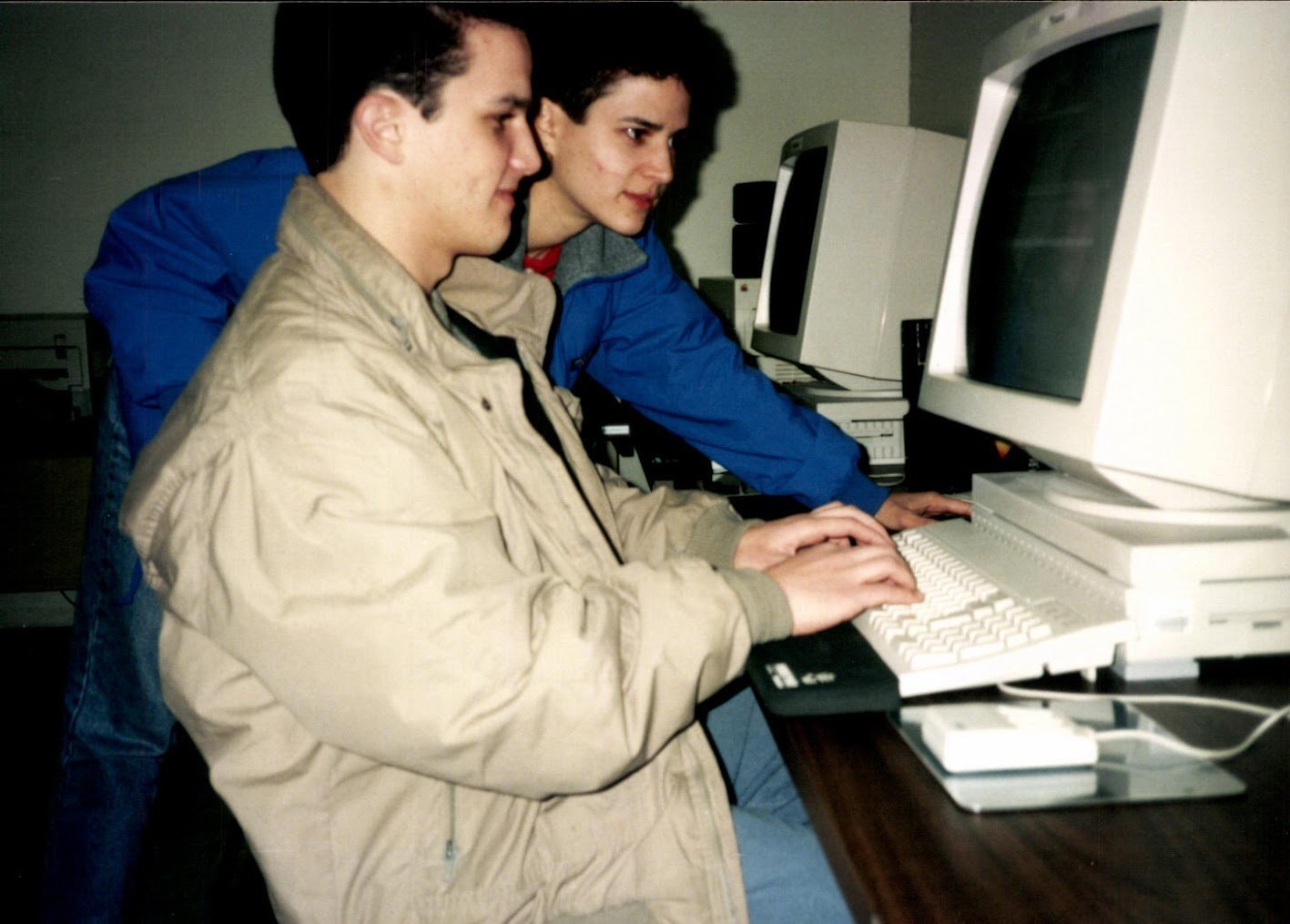

Evan, Serge and Sean Bell, Co-Founder & CEO at GrokStyle – a company that developed an artificial intelligence technology that helps people shop online – at our 3rd Annual LDV Vision Summit in 2016. Check out our interview with the company’s co-founder Dr. Kavita Bala, “Bridging the Gap Between Academia and Industry”.

©Robert Wright

Kat: Can you tell us about the most exciting projects currently happening in the Belongie Lab?

Serge: Some of our projects are bringing Visipedia (the project mentioned above) into the era of Generative AI. In these settings, instead of asking the machine, “What is the thing in this image?” we can have a conversation, or chat, with the image about its attributes, history, connections to other organisms, or cultural significance. Such technology is beginning to surface in products like ChatGPT, but these products often lack an understanding of the long tail of fine-grained concepts of interest to so many groups of enthusiasts, such as birdwatchers or numismatists. Our ongoing efforts infuse this kind of specialized knowledge into a human-in-the-loop system.

Another project we have in the works has the working title “Global Narrative Information Facility (GNIF),” in analogy to the above-mentioned GBIF. This is part of one of our centre’s moonshots about stopping the spread of misinformation. Think of GNIF as a TV Tropes for the real world, or a collection of Periodic Tables of Storytelling beyond the scope of entertainment. This effort is complementary to fact-checking systems; it does not focus on right and wrong. Since misinformation campaigns often leverage people’s preferred narratives, we are interested in identifying how artifacts from social media – a tweet, a Facebook comment, a meme – resonate across a large reference set of evolving human narratives. We envision that this can provide a vital substrate for understanding and predicting the behavior of both humans and bots.

Kat: What advice would you give to aspiring AI researchers and students interested in joining your lab?

Serge: I admit Ph.D. students through the ELLIS PhD portal, so I’d start by pointing them to that resource. ELLIS is a pan-European network of AI research labs, and it features a Ph.D. meta-program that connects students with an advisor and co-advisor in two different countries. Prospective postdocs should check the Pioneer Centre for AI’s jobs page for opportunities. Apart from that, I would say I’m always interested in exploring new directions involving fine-grained analysis of multimodal data, 2D/3D generative models, misinformation detection, and self-supervised learning.

Kat: What do you see as the most significant challenges in AI research today?

Serge: I feel strongly that the spread of misinformation is one of the most significant challenges we face today, but that, of course, goes well beyond the scope of AI research. Within AI research itself, I think one of the most significant challenges is tackling the fine-grained, long-tailed nature of the things of interest to all of humanity. AI advances often start by swinging for the fences in the most general way possible, but such an approach tends to leave special interest groups out in the cold. The history of the internet, with its thriving online communities in every subtopic you can imagine, shows us that people don’t want AI gimmicks; they want AI that works for them. To achieve that goal, I feel strongly that we need to develop AI approaches that work hand-in-hand with human communities.

Kat: We hear through the grapevine that you’re not a fan of the term Foundation Model. What’s your issue with it?

Serge: Naming things is a big deal. It’s a form of capture, for better or worse. When it comes to naming in academia, I’m partial to unpretentious, “exactly what it says on the tin” names, like VLSI or RAID. In that vein, I preferred Tom Dietterich's suggestion of "Large Self-Supervised Models (LSSMs)." Or if it’s a big model using visual and language features, can’t we just call it an LVLM?

Imagine if a certain EE/CS department in the 80s decided to name VLSI technology “Singularity Chips,” or to rebrand RAID as “Rapture Arrays.” That would be stupid.

In the setting of industry consortia, I understand the names will be more colorful/evocative, and for that reason, I have no beef with the term “Frontier Model”. In the end, the important thing is that young researchers have unambiguous terms to refer to key concepts in the field, and if that generation is ok with using the F-word for these models, and they want to cite that one paper from Stanford, so be it.

Kat: What inspired the creation of the Pioneer Centre for AI, and what gap in AI research does it aim to address?

Serge: The Pioneer Centre concept is an ambitious initiative launched by the Danish National Research Foundation, with the support of several private foundations. The aim of the centre is to attract top researchers from around the world and to carry out basic research aimed at high-risk, high-reward, transformative solutions to major societal challenges. Ours is the first Pioneer Centre, which is how we got our nickname P1. Since then, the second and third Pioneer Centres have been established, one on sustainable agriculture and the other on power-to-X materials discovery.

Kat: Can you share some of the key research areas and initiatives currently underway at the Centre?

Serge: Just recently we held a Moonshot Workshop at the centre, wherein our co-leads and students co-created a set of grand challenges that characterize our values and visions within the AI landscape. One of our moonshots is in the CareTech space, combining human and machine abilities to deliver world-class care to the elderly population, drawing upon AI, AR/VR, and Denmark’s home-nurse infrastructure. This project is emblematic of P1 initiatives, in that it combines cutting-edge research themes with unique Nordic advantages, in this case, functioning social systems and a relatively high level of trust in institutions.

Kat: Your foresight about augmented reality emerging as the most impactful technology sector in the next decade was astute. What do you think are the critical factors that have contributed to Apple's success in the augmented reality space, and how might this impact the broader tech industry's trajectory over the next few years?

Serge: Apple is famously secretive about its development processes, so I can only make wild guesses, but I think they quietly studied HoloLens, Magic Leap, and Meta Quest 1/2/3 over the past decade, along with the relevant academic literature (see, for example, Ken Pfeuffer’s work on Gaze+Pinch), and did their Apple thing by identifying the best features across that entire landscape.

Kat: Which initiatives in the field of visual tech and AI inspire you the most?

Serge: LLMs trained with reinforcement learning from human feedback (RLHF), such as ChatGPT, and generative image models such as Latent Diffusion from Björn Ommer’s group are two recent advances that I find particularly inspiring.

Kat: What recent advancements in visual technologies do you believe have the most profound implications for the future?

Serge: There’s a growing trend of researchers focusing on multimodal data, i.e., combining images, text, and audio, in shared model architectures (such as transformers) to solve messy, real-world problems. I think that trend is here to stay, and the implication is that new students in AI will find it completely natural to cultivate multiple specializations, as opposed to diving deep only in one area, such as Natural Language Processing or Computer Vision.

Kat: What industries do you think are going to be reshaped by visual technologies in the next 10 years and then 20 years?

Serge: I foresee that medicine will be completely revolutionized by visual tech in the coming decades.

Claims that certain physicians will be completely replaced by AI are overblown, in my opinion, but I do think that AI “co-pilots” in areas such as radiology, dermatology, and histopathology will be commonplace, to the benefit of everyone.

On a contrarian note, I don’t think advances in visual tech (or AI in general) will bring us the self-driving car future we were promised around 2010. The only way I envision self-driving cars becoming the norm is if governments restrict the use of conventional cars to special lanes, or outlaw them altogether. At least in the case of the US, I don’t see that happening. Ever.

Kat: You’ve played a pivotal role in our flagship event, the Annual LDV Vision Summit, since its inception in 2014. What made you believe that the industry and the world need an event focused on the forefront of visual technologies?

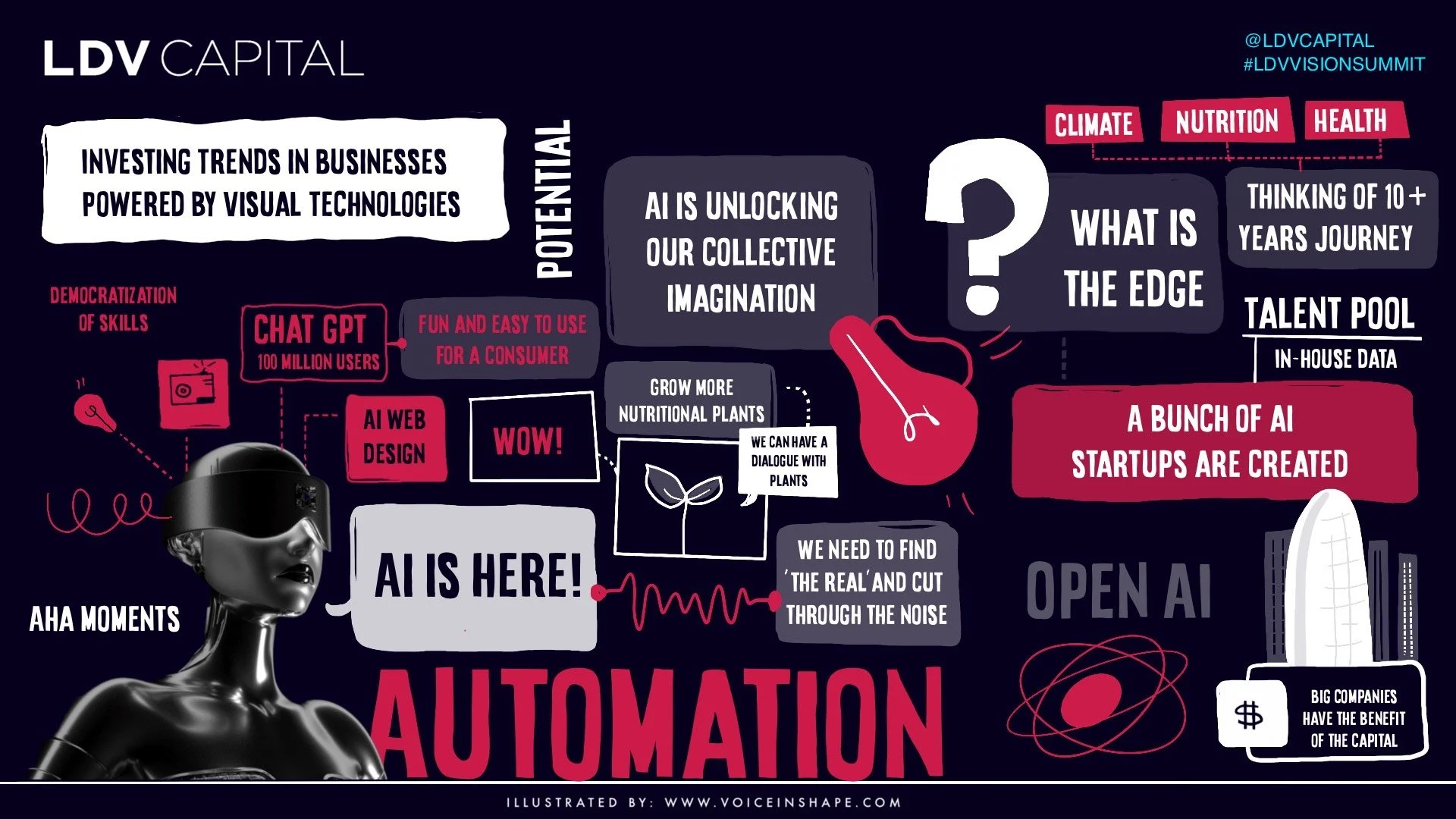

Serge: The Deep Learning revolution had just arrived, and its initial impact was visually focused, with deep ConvNets taking the top spot in the ImageNet challenge by a wide margin. Evan and I made an educated guess that visual tech would experience a Cambrian explosion of entrepreneurial activity and that the NYC tech ecosystem could benefit from a summit focused on those opportunities.

Kat: In 2015, you highlighted computer vision applications in citizen science. Considering the advancements in computer vision over the past few years, how has its integration in citizen science initiatives evolved, and what potential impacts has it brought to the field?

Serge: Apps such as iNaturalist and Merlin Bird ID have surged in popularity since then, accumulating hundreds of millions of observations of plants, animals, fungi, and other organisms worldwide, with thousands of active users every day. This data has been instrumental in a number of modern climate change studies, for which species distribution models are essential to test hypotheses.

Kat: It’s been a decade since we started, and our summit has hosted numerous brilliant speakers. Who left the most profound inspiration on you?

Serge: One event that stands out in my mind is Timnit Gebru’s presentation “Predicting Demographics Using 50 Million Google Street View Images” in 2017. The collision of deep learning and patterns of human behavior evident in her studies foreshadowed what would become a tsunami of public discussion on the pros and cons of AI for humanity.

I’m particularly grateful for some of the friends I’ve made through the summit. One is Harald Haraldsson, a visual artist and software engineer who later became director of Cornell’s Extended Reality Collaboratory. Another is Mikkel Thagaard, a business strategist who, years later, became instrumental in connecting me to Denmark’s startup scene.

Kat: We're excited to announce that our upcoming 10th summit is scheduled for March 21, 2024. What are you looking forward to the most at our 10th Annual LDV Vision Summit?

Serge: I’m most interested in hearing about advances in Healthcare & Neurotech. We’re going to hear about some amazing new technologies in those areas.