Gardin’s Optics-Powered AI Agriculture Platform Increases Greenhouse ROI Across Customers

We are pleased to share an interview with Sumanta Talukdar, CEO & Founder at Gardin, and Evan Nisselson.

1. What is Gardin’s mission?

Agriculture is the only foundational industry that doesn’t measure its product accurately and at scale. This has left a $1T digitization opportunity virtually untapped. Gardin’s mission is to unlock this opportunity by enabling food producers, for the first time, to measure the biology of their crops in real time and effectively at scale.

2. How is Gardin’s optical phenotyping stack differentiated from alternative sensing or analytics solutions in precision agriculture today?

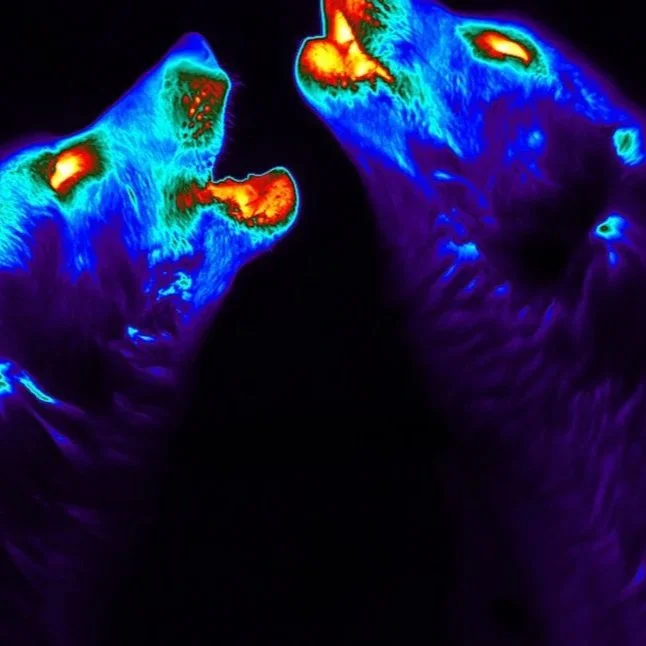

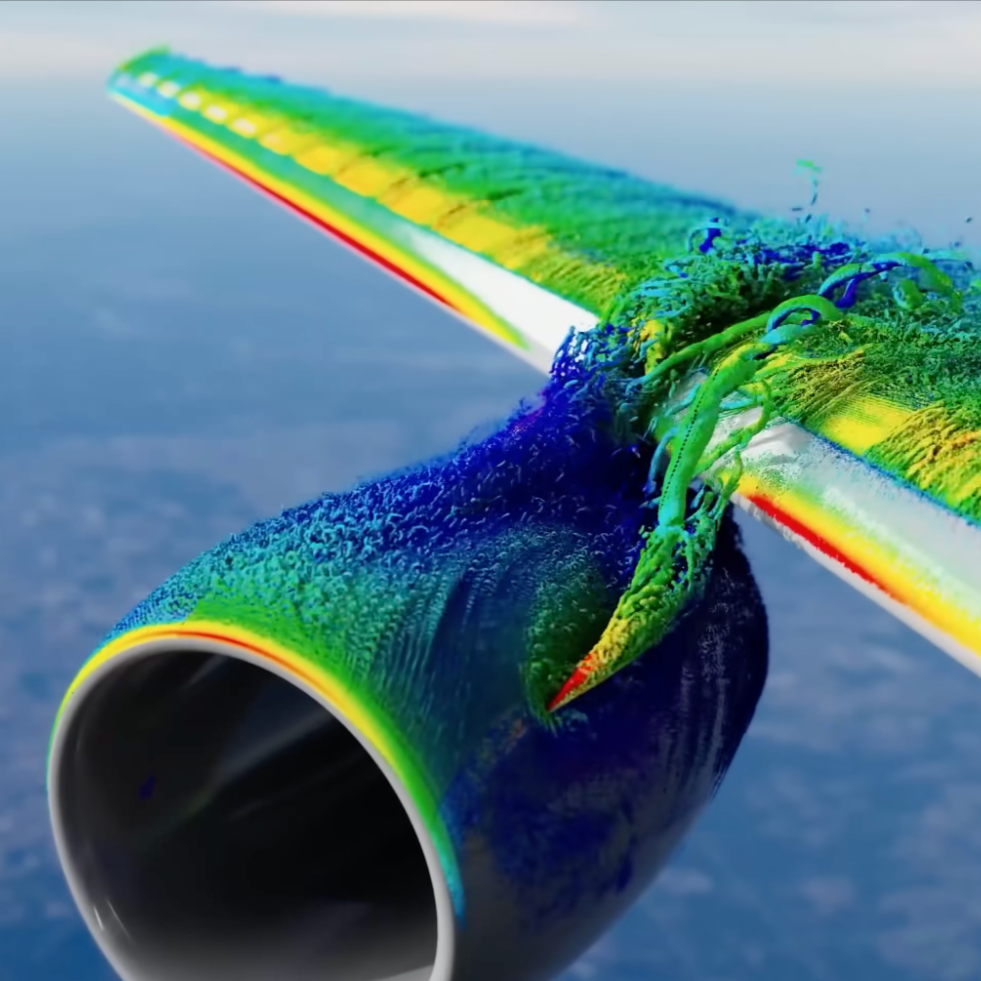

Gardin is the only solution that simultaneously delivers on four critical requirements for greenhouse growers. First, our multimodal robotic optical sensors measure crop photosynthesis in real time and at scale. Second, our Gardin AI brain transforms raw biological signals into clear, actionable insights for growers. Third, the entire platform operates fully autonomously, removing the need for manual data collection and interpretation. Fourth, Gardin is delivered through a scalable SaaS model, aligning our success with our customers’ outcomes.
