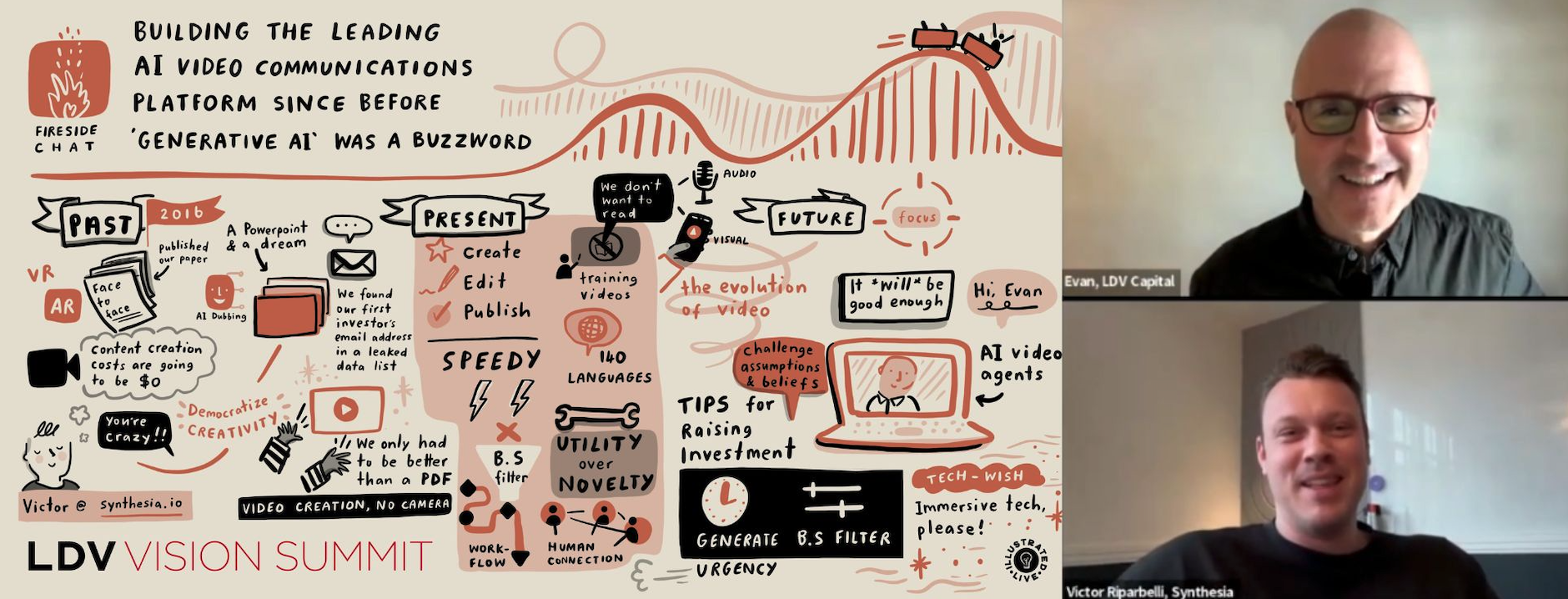

Building the Leading AI Video Communications Platform For Business Since Before 'Generative AI' Was a Buzzword

Fireside chat between Evan Nisselson and Victor Riparbelli, Co-Founder & CEO at Synthesia: A Founder’s Roller Coaster, Stories From Before the AI Video Hype and Into the Future

Victor Riparbelli, Co-Founder and CEO at Synthesia

Evan Nisselson, Founder and General Partner at LDV Capital, first met Victor Riparbelli, Co-Founder & CEO at Synthesia, in November 2018, when Victor shared his team’s vision to empower anyone to create video content without cameras or studios. In 2019, LDV Capital led Synthesia’s $3.1M Seed round. A few months later, one of Synthesia’s first AI avatars premiered at our 6th Annual LDV Vision Summit. At the time, the avatars had limited emotional expressiveness, but that’s no longer the case! Synthesia’s AI-generated videos are now transforming how companies communicate with customers, employees and beyond. Just as personalized newsletters became common, personalized videos from banks, insurance companies and others are now delivering exponential value.

In January 2025, the company announced a $180 million Series D round led by NEA, with participation from WiL, Atlassian Ventures, PSP Growth and existing investors. Adobe Ventures made a strategic investment in April 2025, the same month the company surpassed $100 million in annual recurring revenue. Synthesia is now the UK’s most valuable generative AI media company and the worldwide market leader in enterprise AI video.

Most recently, the team announced Synthesia 3.0, the next era of video, powered by video agents that bring two-way interactivity resembling a live conversation. These agents can run training sessions, screen job candidates and guide customers through learning experiences.

Evan and Victor discussed Victor’s journey, Synthesia’s rapid growth and the future potential of AI video, including the rise of video agents – the next big shift toward making videos interactive and personalized, evolving the medium beyond a one-way broadcasting format.

Check out the recording from our 11th Annual LDV Vision Summit or read our shortened and lightly edited transcript below.

Evan: What sparked the idea to start Synthesia and how did you and your co-founders come together?

Victor: The story begins in 2016. After working in the Danish startup ecosystem and doing a bunch of smaller projects, I moved to London. I wanted to build a company. I knew I loved building products, but I’d also learned that I wasn’t super excited about building accounting programs and business processes. I love science fiction, frontier technology and the creative arts, and I wanted to do something that combined those things. This was when VR and AR were the hot thing, and I was involved in a lot of VR and AR projects.

After a while, I realized, “While the tech is cool, the market isn’t there.” Looking back now, moving on was the right decision. But doing all that work, I met a lot of interesting people, among them Professor Matthias Niessner, who ended up becoming my co-founder. He’d been an associate professor at Stanford, where he co-authored a paper called Face2Face, which was the first time the world saw these kinds of technologies. When I saw that paper, I felt like I was seeing magic for the first time. It was simple. Looking back at it today, it’s not impressive because the world has moved on, but back then it was a neural network producing photorealistic video in real time. I couldn’t let it go, and I started forming a thesis: as these technologies get better and better, the marginal cost of producing content is going to go to zero, not just in dollars, but also in the time and skill required.

We’ve seen this before in text. You can write and distribute a book from your bedroom; you don’t ever need to leave your computer. In music – which is my big passion outside of work – we can sample, we have virtual instruments, and you can recreate almost any song using your MacBook. I felt that was going to come to video as well, and this was the technology that would unleash it, creating a massive economic opportunity to build a company and also democratize creativity, which was exciting to me.

The second part of the thesis was that when we can code and program video, that will change video as a media format. That’s slowly happening now. When we can program video, we don’t have to make it linear where we all watch the same video like we do on YouTube or Netflix.

Video is going to become interactive and personalized. It’s going to be something very different from what we know. The best way to think about it is to compare a physical newspaper and a website: in spirit, they’re the same thing, a page of information, but a website can be updated in real time. You can’t do that with a newspaper. A website can have a comments field; a newspaper can’t. With one click, you can navigate to millions of pages. It can be personalized. There are so many things you can do on a website that you can’t do with a newspaper. And while it’s still early, that’s the way the world is going, and that’s the opportunity we saw back then.

Evan: We believed in this vision, but most people felt it was contrarian. What percentage of people got it or agreed with you versus saying, “No, it’ll never happen”? This is one of the challenges many startups face in the early days. What was that feeling back then?

Victor: This was before generative AI was working. It was a contrarian opinion, and most people didn’t get it. It wasn’t fun for the first three years. We were waiting for the technologies to work and hoping they would become good enough to do something useful before we ran out of money. So it wasn’t a fun time, but looking back now, maybe the timing was perfect. We survived long enough for the technology to start working and for the world to get on board with the thesis. It’s been a fun ride since then.

Evan: Tell us a little bit about how you and Steffen reached out to – and inspired – Mark Cuban to invest. What was your first financing before we invested?

Victor: We had to raise some money. Building a company like this is expensive. I was 26 at the time, and Steffen was the same age. As you know, we didn’t have impressive CVs. We hadn’t been at Facebook or Uber or doing AI research for years. A lot of people saw two guys with a crazy idea and a PowerPoint deck. That’s probably why we got turned down by almost 100 investors. Nobody got it, and to some degree, I can see why.

We had a long list of people we thought would be interested. Mark Cuban was one of them. He sat squarely in the overlap of the Venn diagram: people who understand both media and technology. We wanted to get in touch with him, and you need an email address to do that, right? His email address wasn’t publicly known, but we knew he was on Shark Tank, which is produced by Sony Pictures Television. We also knew that Sony had been hacked a few years earlier. Steffen, my co-founder, downloaded all the emails that were leaked in that hack, and in that dump we found Mark’s email address. We sent him a message and he responded within five minutes, and he has a Gmail address, believe it or not. I remember telling Steffen, “There’s no way this is real. You’re taking the piss.” He said, “No, it’s actually real.” Then we had a 10-hour-long email conversation with him. We never spoke on the phone and never met him. He asked a bunch of questions and ended up investing.

It turned out he had implemented Face2Face himself at home, so we didn’t have to convince him of the thesis I mentioned before. He was already on board with that; he was looking for a team to fund. With the other investors we were talking to, we had to start with, “Hey, this big thing is going to happen in the world.” To invest, they first had to believe that and then believe we were the right team to do it. With Mark, it was more of a punt on whether we could be the ones to do something interesting in that space.

Evan: It’s a great hustle! Can you tell the audience a bit about the state of the product back then and how it compares to the product now?

Victor: The first thing we tried was building technology for video professionals: agencies, production studios, Hollywood, etc. They were the obvious people to sell AI video technology to. We built an AI dubbing solution: take a video recorded in, say, English, translate it into Spanish by replacing the audio with a new voiceover, and then animate the face to match the new voice track. This was before voice cloning worked at scale and before these technologies were stable. It only worked if you looked straight at the camera.

There were so many constraints around this. We did a few good campaigns with it, but we quickly found out that the product wouldn’t scale. The path forward would’ve been to build a visual-effects studio with proprietary technology. That could probably have been a decent business, but that wasn’t the ambition we set out with.

So we said, “Okay, we’ve built this technology – what else can we use it for?” We went around and spoke to hundreds, if not thousands, of people to understand: what is video, why do people make videos, who’s making video today and why? Who’s not making videos but would love to? That last category became more and more interesting, because we met so many people who were interested in making videos but weren’t doing it. They worked in a corporate job, couldn’t get a budget from their boss, didn’t know how to use a camera and couldn’t use editing software.

All these people were saying, “Give me some way to make video without having to use a camera.” When we looked at what they wanted, a lot of it was talking-head style videos. That led us to the idea of avatars. Creating a talking-head video is much easier than doing an entire Hollywood film with someone fighting or flying out of an airplane. It’s a much more constrained domain. So we built technology to create these talking-head avatar-style videos.

A few AI avatars that you can find on the Synthesia website.

The insight was that the people who weren’t making videos were making text, PDFs and PowerPoints, and they wanted to make videos instead. So we only had to be better than a PDF or a PowerPoint. We didn’t have to be better than the “real video” made by Hollywood studios or production companies. The quality threshold was different. That’s how we figured out that avatars were the right way to go. They let all the world’s PowerPoint users become video creators. Since then, we’ve built tons on top of it, but that was the fun starting point.

Evan: Let’s jump to now and talk about today’s use cases. Talk a little bit about some of the huge companies and how they’re using and benefiting from Synthesia today.

Victor: Today, we’re the biggest AI video platform for the enterprise and we help PowerPoint creators become video creators. We help you not just with creating the video, but with all the collaboration around it and the publishing. Create the video, edit it with our PowerPoint-style interface, collaborate with your colleagues and publish it using our proprietary video player – everything connects together.

With the current state of the tech, we see a lot of training use cases. For many people, “training” just means L&D (learning and development), but it’s much broader than that. Training can be low-value, like compliance, which is certainly a use case, but it can also be high-value: training your customers on how to use your product, explaining why your product is better than competitors’ in a product-marketing sense, customer support. Across the business, wherever you’re relaying information or creating how-to content, that’s where Synthesia comes in.

It’s clear that today, people want to watch and listen to content – they don’t want to read as much. We provide a way to create content at the same speed and scale as text or PowerPoint, but the output is video.

One of my favorite use cases: Zoom uses Synthesia to train a thousand salespeople all over the world.

Evan: How many languages do they do that in? One of my friends runs a billion-dollar business and recently said they did it in dozens of languages to train their network of team members.

Victor: It works in 140 different languages. The great thing about these videos is that they’re kind of like a Word document. You can create it once, then open it up and edit something in it – replace sections, take a section out, add a section. That’s something you can’t do with ‘real’ video. That was one of the surprises for us. We were focused on the front end of creating the content initially – the cameras and all that stuff, which is of course an annoying process. What we found was that a lot of the frustration comes after you’ve shot the content: someone didn’t do their line properly, they said something wrong, or you changed the name of the product during the editing process. All those things are much easier to solve when you use AI.

Evan: What are some of the newest features you plan to introduce for Synthesia users worldwide?

Victor: The big thing that’s coming now is making these videos interactive and personalized, evolving video beyond a pure broadcasting format. Increasingly, as a customer, you’ll interact with the products you use through video interfaces. If you have a problem, instead of going to a text-driven knowledge base, you’ll go to a video interface. You’ll explain your problem out loud in your own words, and these video agents will take in what you’ve said and explain, “Hey, Evan, this is why your bill was higher this month than last month. This number went up; something else happened,” etc. You can resolve those kinds of things live. It could also be learning more about how a company or a product works.

You’ll go into interfaces that are more conversational and personalized to you, as opposed to watching half-baked videos. It’ll take a while for the technology to get there, but that’s the direction this space is moving. For us, that’s exciting because it means we can mediate many more touchpoints. When everything runs on our platform, including the video player and the interface you use as a consumer to interact with a company, we can build a much more cohesive experience around what is essentially communication.

Every year, we bring together an impressive lineup of speakers – from tech giants to under-the-radar startups, esteemed research labs and leading venture capital firms. Come join our 12th Annual LDV Vision Summit on March 11, 2026 – RSVP to secure your free ticket.

Evan: Back when you started, “generative AI” wasn’t even a term, and now there’s so much hype. What keeps you up at night about AI’s future, and what are you doing to stay ahead and deliver more value to customers?

Victor: What we’ve always done well, if I do say so myself, is focus on what we call utility over novelty. AI is driven a lot by splashy demos and exciting new things. Customers, both consumers and businesses, are willing to part with their money to try new AI things, and that’s great! We want lots of experimentation, but it can also be a false signal, because we’ve all seen the hype cycle.

When I started in VR/AR back in 2016, everyone was convinced that was the future. VC money was pouring in; people were creating all sorts of things. But two to three years later, everyone had tried those things and said, “This is a crappy experience. It’s fun to try once, but I don’t want to do my work sitting with a VR headset on.” These are what I call innovation potholes. We’ve been good at avoiding them, which means not chasing the latest fad just because a customer says, “We want avatars in VR; I’ll pay you $300,000 to do it.” You have to ask “why” five times and figure out whether people are solving a real problem or doing something interesting they haven’t thought through.

The best way to think about it as a company today is that landing customers has become a lot easier with AI, because everything is flashy and interesting and people will try it out. But the real vote is the renewal. If you’re selling B2B software, that’s 12 months later. If it’s consumer software, it’s a couple of months later: how many people are churning? If your churn rate is 30-40%, you may have created something cool, but not something enduring. That’s how we think about it.

The other part: there’s so much emphasis on technology. We love talking about models. I always say that smart people talk about models, but customers talk about problems, workflows and the apps they need. We’ve built an entire platform and workflows around it. A lot of that isn’t as interesting as the AI itself – it’s the boring things you need – but together those small, boring things create a cohesive experience.

Fireside chat between Evan Nisselson, Founder and General Partner at LDV Capital, and Victor Riparbelli, Co-Founder & CEO at Synthesia

Evan: The two key things there are focus and the platform built on top of brilliant tech. As the company has grown, with more customers and a larger platform, how do you stay focused? How have you made those decisions as you’ve evolved as a CEO?

Victor: You want to enforce a good bullshit filter in your company. If you work in tech, you’re surrounded by bullshit all the time. Everyone is always “killing it.” Every new product is always going to be the next big thing. There’s a lot that’s great about that, and it’s a good foundation for the industry, but most of it is bullshit. Most of what people were saying five or ten years ago didn’t turn out to be true.

You have to be good at finding the signal in the noise. In hype cycles, it’s easy to look at competitors – they just raised $15 million and are doing something – and think, “Maybe I should be doing that as well.” Sometimes you should – that’s the hard part – but a lot of times, you shouldn’t just because someone else is. That’s something you can try to enforce culturally.

On a practical level, focus on utility. A lot of people in AI video are trying to build for Hollywood, the cool things: “let’s make films.” I’m totally for that, and it’s going to happen at some point. But the harsh truth is that none of this stuff is good enough yet. It’s getting there now. For the last two to three years, it’s been things like six fingers, no continuity, the same actor twice. Yes, you can make fun music videos and meme content and maybe even get to $20-30 million of ARR, but the real acid test of AI video is when people just create videos and don’t tell anyone they’re AI-generated, because the videos are useful, not just fun.

Working with a technology that people find magical is amazing, but it can easily lead you down paths where you run in circles or, worst case, waste resources.

Evan: I remember in the early days, after we started collaborating, you’d be up most of the night handling support – the nitty-gritty part of it. That’s probably where you learned what customers needed and where the bullshit factor was. Now you have a bigger team, and that focus has helped you succeed. Recently, you raised a significant round from NEA and other top investors. What have you learned through each fundraise that you could share as advice for other entrepreneurs?

Victor: Apply the BS filter again. 99.9% of VCs are lemmings who do what other people do and say, and that’s okay. That’s how the world works, and it’s a model that kind of works. Be realistic about it. Beyond building a great company with great fundamentals, vision and product, in the fundraising process you want to engineer urgency and that lemming effect. Try to do your raises quickly and in a structured way. Make sure everyone gets an answer in the same week. Don’t let a fundraise drag on for three, six or eight months.

When we met you, I’d been fundraising for 9 months and had made every mistake in the book.

Evan: I remember that first meeting. You had the passion you still have today, but it was late in the day. From your perspective, how did that meeting go?

Victor: We were frustrated at that point. We felt we had something real, but no one understood it. I still speak fast, but back then I was maybe 10% faster than I am now. I remember you stopped me halfway through the meeting: “Victor, can you slow down like 15% so I can hear what you’re saying?”

Evan: And so you could breathe! I was worried you were going to pass out. And then Steffen was leaning back in his chair and almost fell off laughing, because I guess nobody had ever said that. I was fascinated.

One of the questions I love asking: What are the best and worst personality traits of an entrepreneur?

Victor: I’d say the best is having a strong bullshit filter. That’s important. When I meet founders who are better than me, they’re good at seeing through the noise.

The worst is probably the opposite: jumping on bandwagons and following consensus opinions. If you’re following consensus, you’re too late or making decisions based on incomplete data, relative to whatever the current consensus is.

Victor Riparbelli, Co-Founder and CEO at Synthesia, at our 6th Annual LDV Vision Summit in 2019: Can Synthetic Media Exponentially Scale Video Production Globally?

Evan: Now for investors: what are the best and worst personality traits?

Victor: Best: being highly sociable and likable, because that’s what matters in winning deals. Least good: being an asshole because reputation spreads quickly and you’ll close off deals for yourself. The social aspect is undervalued. In many ways, VC is about winning deals, and that’s driven by humans.

Evan: Building a business is a roller coaster and every stage is a different chapter. In our regular calls and breakfasts in London, I always ask, “What’s keeping you up at night? How are you doing as a person?” People build businesses and it’s important to find inspiration in different places. Where do you find yours?

Victor: I find a lot of inspiration in movies and sci-fi. I’m tired of reading about AI. Of course I read about AI, but there’s so much noise! What I think is interesting to study right now is what SaaS looked like 10-13 years ago, when everything was on-prem and the first SaaS tools started working. What were the patterns of the companies that made it through? We’re in a new era with a different type of software. Instead of looking at what other AI companies are doing, it’s more interesting to look at what great SaaS companies did 10-12 years ago. It’s not the same, and things change, but there’s less fog of war. Those stories have played out; you know who the winners and losers are.

There’s so much noise – people raising money left and right, propping up valuations, all sorts of random things. It’s difficult to tell who’s doing well versus who’s just getting propped up or doing hype. There are more lessons looking backward – especially in a hypey space. What is a good business? A good business solves a workflow. It’s not about the technology itself; it’s the human connection: meeting customers and building deep relationships. The models and AI stuff are great, but you learn more by looking back at what makes a great business now. Five years ago it was about the models – how to get to something that works and the opportunities that would create. Those are more obvious now. Now it’s about building great, enduring businesses and you want to learn from the past more than the present.

Evan: It’s one of the reasons I read a lot of biographies and books about successful people and people who’ve had challenging lives. Those help me evaluate the future and opportunities to build valuable businesses.

Evan: You had a fascinating TED Talk recently. The topic was: “Will AI make us the last generation to read and write?” How is this possible and what role does Synthesia have in that evolution?

Victor: I managed to piss off a lot of people with that because people don’t want this to happen. They think it’s a bleak future, which I understand. I’m not saying people will never read or write again. I don’t think that’s the case, but I made it provocative on purpose to get reactions.

My thesis is simple: if you look at how people prefer to consume information today, it’s video and audio. Especially for people who are 18 or younger – they want to watch and listen to their content. That trend is only going to continue. My provocative statement was: what if at some point we’re not reading and writing at all? We’re only watching and listening. The way we send messages won’t be typing them out – we’ll record ourselves, use avatars, whatever.

The thing that will enable this is that creating video and audio content becomes as easy as text, which it isn’t today but it will be soon. I also addressed the guilt associated with this. I love reading, but I read less and less. When I want to learn a new topic, I start on YouTube and maybe TikTok. I feel like I should sit and read the 200-page book in my armchair – do something “good and intellectual” rather than swipe on my phone. I don’t have the answer, but why is that? A lot of books are overly dense. There are too many pages. You can’t write a book that’s 30 pages long, even though it should be – publishers want 150.

Evan: It’s also not interactive; it’s a linear story. There’s a benefit to that, but when I occasionally pick up a newspaper, I read it and get bored because I can’t interact, click a hyperlink or jump around.

Victor: Exactly! Maybe at some point we’ll look back at reading and writing as inferior ways of communicating. In text, so much information is lost. By definition, the world around us is complex and meaningful and deep, and you can’t convey all that in text. Just as hieroglyphs and cave paintings, technologies we once relied on, aren’t a thing today, maybe communication will evolve into something different. People think it’s dystopian; I get why. But if we can communicate more richly at the same scale and speed we do today, I don’t think it’ll reduce people’s ability to share ideas. It will make us more creative and interesting. It’ll probably take a while to get there, though.

Sphere, Sands Avenue, Las Vegas, NV, USA. ©Andri Aeschlimann, Unsplash

Evan: I agree and we’re biased at LDV – over the last 12 and a half years we’ve believed that visual tech and visual communication will far surpass text communication. Which visual tech do you hope exists in 20 years?

Victor: In 20 years, it’s almost hard to imagine what won’t exist, given the pace of innovation. But as someone who loves art, culture, film and music, I’d love a technology that’s immersive like VR/AR – but a great, social experience.

I went to this venue in Las Vegas last week, and it was one of the best media experiences I’ve had in a long time. But it requires a $2.1 billion dome in Las Vegas, and I’m not fond of going to Las Vegas. Anything like that, anything that makes you feel media in a different way, is powerful.

For me, that was a huge aha moment. There’s something there. A headset doesn’t do the same thing yet. AI doesn’t do the same thing yet. But I’m sure the way we go to concerts – or what cinema is today – will become a different immersive experience. I hope that happens, because the ability to use our imagination and take ourselves to new universes is such a fundamental part of being human.

Evan: What’s your advice for first-time entrepreneurs who are just starting?

Victor: If most people believe in something, assume it’s wrong. That’s not always true, but it often is with new, fast-moving technology. I also saw a comment here that “90% of this tech is used for bad things, like porn.” People have been saying that for five or six years, and it’s wrong from a numbers perspective, even though there are negative consequences.

You have to tune out dogmas that come from people just believing things without examining them. Most new technologies arrive with biases – people are afraid of them, and for good reason; we should, of course, be careful about the tech we develop. But there’s power in seeing through that filter.

If you want to build a venture-scale business, you have to see around corners. That requires trying to distinguish dogma from first-principles thinking. You can train yourself to do that. If you’re in a room where everyone agrees on something, maybe challenge it when you get home and see if there are more interesting perspectives.

Evan: Fantastic advice, Victor! We appreciate you joining us today and sharing your wisdom with the audience. It’s an honor to partner with you. The whole team at Synthesia is genuine, passionate and determined to create value. We wish you continued success at Synthesia and in everything you do!

At LDV Capital, we are honored to have played a very small part in Synthesia’s incredible journey, which continues to evolve every day. We hope you enjoyed this interview as much as we did. Here’s what Victor said about participating in our 11th Annual LDV Vision Summit: “It was an honor to have a fireside chat with Evan at their 11th annual LDV Vision Summit. The Summit is always a great gathering of entrepreneurs building the next generation of AI businesses. LDV Capital has been a great backer since leading our Seed round in 2019.”


We are thrilled to invite you to our 12th Annual LDV Vision Summit (virtual) on March 11, 2026!

We’re thrilled to announce the first few speakers:

Dr. Jan Erik Solem, CEO and co-founder of Stær, is building robots that are truly autonomous, able to map new environments, understand space, plan their movements and continuously improve. Most recently, Dr. Solem was director of engineering for Maps at Meta following the company’s 2020 acquisition of his startup Mapillary, where LDV was the first investor.

Julia Hawkins is a General Partner at LocalGlobe & Latitude with a focus on health and deep tech. Prior to LocalGlobe, Julia worked at Universal Music, where she established the company’s corporate venture arm and led investments in ROLI and Sofar Sounds, among others. Before that, she worked at Goldman Sachs, Last.fm, and BBC Worldwide.

Dick Costolo is the Managing Partner and Co-Founder of 01 Advisors. Prior to 01 Advisors, Dick was CEO of Twitter from 2010 to 2015, having joined the company as COO in 2009. During his tenure, he oversaw major growth and was recognized as one of the 10 Most Influential U.S. Tech CEOs by Time. Before Twitter, Costolo worked at Google following its acquisition of FeedBurner.

Marina Temkin is a venture capital and startups reporter at TechCrunch. Prior to joining TechCrunch, she wrote about VC for PitchBook and Venture Capital Journal. Earlier in her career, Marina was a financial analyst and became a CFA charterholder.

Join our free event to be inspired by cutting-edge computer vision, machine learning and AI solutions that are improving the world we live in!