“The Future of Seeing” by Dr. Daniel Sodickson: How Imaging Is Transforming Medicine, Science and Society

Dr. Daniel Sodickson is a physicist in medicine whose career has been devoted to developing new ways of seeing. He serves as Chief of Innovation in Radiology at NYU Grossman School of Medicine, where his pioneering work continues to push the frontiers of medical imaging. A past president and gold medalist of the International Society for Magnetic Resonance in Medicine, Dr. Sodickson is also a Fellow of the U.S. National Academy of Inventors, recognized globally for his transformative contributions to MRI and imaging science.

Dan Sodickson at LDV Capital's Annual General Meeting in 2023: The Visual Tech & AI Experts Building The Future. ©Robert Wright

Dr. Sodickson has been an expert at LDV Capital since 2021. He began speaking with our portfolio company Ezra and later joined it as chief scientific advisor. He has long been a thought leader on the future of imaging and AI. At our 5th Annual LDV Vision Summit in 2018, his keynote, The Rebirth of Medical Imaging, explored how artificial intelligence and inexpensive sensors would revolutionize medical imaging by moving beyond imitating the eye to emulating the brain – unlocking entirely new dimensions of information to better understand ourselves and eradicate disease.

We were honored to feature him in our article, Why These 11 Brilliant Professors & Researchers Are Focusing on AI, where he shared his perspective on which sectors stand to benefit most from advances in AI and visual technologies over the coming decade. We also collaborated with Dr. Sodickson on our 2018 LDV Insights report, Nine Sectors Where Visual Technologies Will Improve Healthcare by 2028, to which he contributed his views on the future of imaging and healthcare. His insights were further highlighted in Evan Nisselson’s articles, Healthcare by 2028 Will Be Doctor-Directed, Patient-Owned and Powered by Visual Technologies and “Your Bathroom Will See You Now”.

Dr. Sodickson’s book, “The Future of Seeing: How Imaging Is Changing Our World”

In his book, “The Future of Seeing: How Imaging Is Changing Our World”, he traces humanity’s long quest to illuminate the invisible – from the first biological eyes that evolved in Earth’s ancient oceans to the telescopes, microscopes and MRI machines that map worlds beyond the reach of natural vision. Blending history, science and imagination, he reveals how imaging has reshaped medicine, altered economies and reframed our understanding of privacy and identity. Now, on the brink of an AI-driven revolution, Dr. Sodickson invites us to consider a future in which technology emulates not just our sight, but our very minds – changing how we see the world, each other and ourselves. Dan’s vision aligns with our fund’s thesis dating back to 2012.

We had the privilege of reading a few chapters ahead of publication. Here are some questions we asked Dan, which we hope will inspire you to read the book in full. It’s absolutely worth your time, and its ideas embody the same bright future of visual technologies and AI that we wholeheartedly believe in.

Interview with Dr. Daniel Sodickson

Evan: Visual tech & AI are revolutionizing nearly every industry. From your perspective, how has medical imaging evolved over the past few years? What capabilities exist today that you couldn’t have imagined a decade ago?

Dan: In recent times, both the hardware and the software of medical imaging have been in a state of remarkable creative flux. First of all, the field has been shifting from a “bigger is better” to a “less is more” ethos. In an effort to improve the accessibility of medical imaging, enterprising academic labs and companies around the world are building low-profile prototypes of traditionally bulky and expensive imaging devices like MRI machines and CT scanners. This trend is enabled in part by AI. AI can take incomplete data and fill in what is missing based on what it has learned from large numbers of examples. This capability allows images of modest quality emerging from accessible imaging devices to be “rescued.” It also promises to enable even more striking advances, which will allow imaging to move from imitating the eye to emulating the brain.

Evan: Could you explain what that shift means for the future of the field?

Dan: Eyes are our raw visual sensors, and many imaging devices have taken inspiration from how biological eyes work. But our brains also play an outsize role in vision. Indeed, the world we see is in many ways a lie. The brain fills in what is missing using previously acquired knowledge of how the world works. Emulating the brain will enable some remarkable new capabilities in artificial imaging. For example, with AI we can give our medical scanners a memory. My research group recently published work on using prior scans to enable reconstruction of state-of-the-art images from dramatically less or dramatically worse data than is normally required. This means that the more we see you, the faster we can scan you. It also means that we can use accessible devices – cheap MRI machines or even wearable sensors – as interval scanners to detect change. My group has also combined prior images and blood test results with current images to reduce false positive rates in prediction of the risk of future disease. This means that the more we see you, the better we can predict your health. I would note that these uses of AI are different from typical “downstream” applications of AI in imaging, which take existing images and process them as humans might. Instead, I think of these approaches as “upstream AI,” which allows us to change the data we gather, and even the machines we use to gather that data. I believe that new imaging hardware, outfitted with upstream AI, will ultimately allow us to change the role imaging plays in our lives, making artificial imaging as continuous as biological vision, and making healthcare more proactive, predictive, and protective.

Dan Sodickson at our 5th Annual LDV Vision Summit in 2018, delivering the keynote, The Rebirth of Medical Imaging.

Evan: Your book “The Future of Seeing: How Imaging Is Changing Our World” traces vision from the first organisms in the early oceans to the rise of AI-powered scanners and even environments able to sense the smallest changes in our bodies. What inspired you to write this love letter to visual tech?

Dan: Whether we realize it or not, we are all creatures of imaging. We navigate the world using vision and our other senses to map out what is where. We carry cameras in our pockets and consume countless hours of visual content. And yet, in the modern era, the mechanisms of imaging are arguably more hidden than ever. Advanced imaging devices like telescopes and MRI machines have become the domain of specialized experts. I wanted to give imaging back to everyone. I wanted to share the story of how we humans came to see in extraordinary new ways, and how each new means of seeing ended up transforming science, society, and everyday life. I also wanted to alert people to the still newer ways of seeing that are emerging in today’s world, promising once again to transform the way we live, and also the way we understand the world, one another, and ourselves.

Evan: You describe “type 2” scanners of the future as cheap, answer-oriented and drugstore-ready. How do you see them fitting into the current healthcare ecosystem?

Dan: I see these “type 2” scanners of the future as early warning systems, like those we deploy to alert us to dangerous weather. Such scanners don’t need to have top-of-the-line image quality – they just need to be able to detect changes from individual baseline states of health. Then, whenever the warning flag is raised, there is a natural handoff to the current healthcare system, which is optimized for detailed diagnosis and treatment. It is well known that when you catch diseases like cancer early, they are less expensive to treat and are also far easier to cure.

Evan: Could ‘type 3’ scanners, embedded in our environments, blur the line between healthcare and everyday life? What excites you most about this idea, and is there anything that concerns you?

Dan: Absolutely. I firmly believe that healthcare in the future will be continuous rather than episodic. Rather than being reactive, triggered by symptoms or other signs that often come too late, healthcare will be proactive. I believe that in the not-too-distant future we will be able to build something like a Google Maps for health: a suite of tools that know where you’ve been, that are aware of the landscape of possible threats, and that can guide you to your desired destination of healthy longevity. People are hungry for this kind of guidance, as is evidenced by the burgeoning interest in companies like Function Health and Ezra, with which I have been working closely. I believe that in the modern era of AI and Big Data, we are now in a position to deliver such guidance, and it is high time we got started!

As for concerns, there are many – for example, how we protect medical privacy in an era of continuous health monitoring, and how we avoid “crying wolf” by raising warning flags only when they are merited. What excites me, though, is that robust answers to many of these questions are beginning to take shape. We are beginning to learn that individual medical data points only make sense when they are taken in context, and that context-aware decision systems can tell us when information is truly actionable.

Dr. Sodickson’s vision perfectly aligns with our belief that visual technologies and AI are – and will remain – the engines driving humanity’s greatest innovations. It’s the same thesis that we have had at LDV Capital since 2012.

Evan: If scanners of the future can spot symptoms, diagnose and even “sound alarms” on their own, what role will physicians play in interpreting and acting on those alerts?

Dan: There will, I think, be a natural handoff between machine and human. Detecting a worrisome change is a far cry from finding a definitive diagnosis and designing an effective therapy. Some day, these tasks too may be guided or even performed wholesale by AI. By that time, though, I fully expect that a new ecosystem of human jobs will have developed. This is what happened in the wake of the industrial revolution – entirely new classes of jobs were created to minister to the machines – and with the huge current investment in AI, I think we are seeing something similar.

Evan: Current VR/AR tools mostly mimic natural vision, offering overlays to the existing reality. What would it mean to move beyond realism and introduce entirely new signals into our perception?

Dan: I think it would mean opening ourselves up to new perspectives. Imagine sending signals from an X-ray camera into a headset, or even piping such signals directly into our brains’ visual centers. This would give us X-ray vision. One can also imagine incorporating signals from a cloud of distributed sensors, which would expand our sense of self to incorporate potentially large swaths of space. To embrace some science-fiction tropes which are inching their way towards real science, one could even imagine sharing neural signals from other humans, which would enable a new kind of concrete empathy. We still have some way to go in making such new ways of seeing practical, but many of the building blocks already exist today.

Excerpt from Dr. Daniel Sodickson’s book “The Future of Seeing: How Imaging Is Changing Our World”

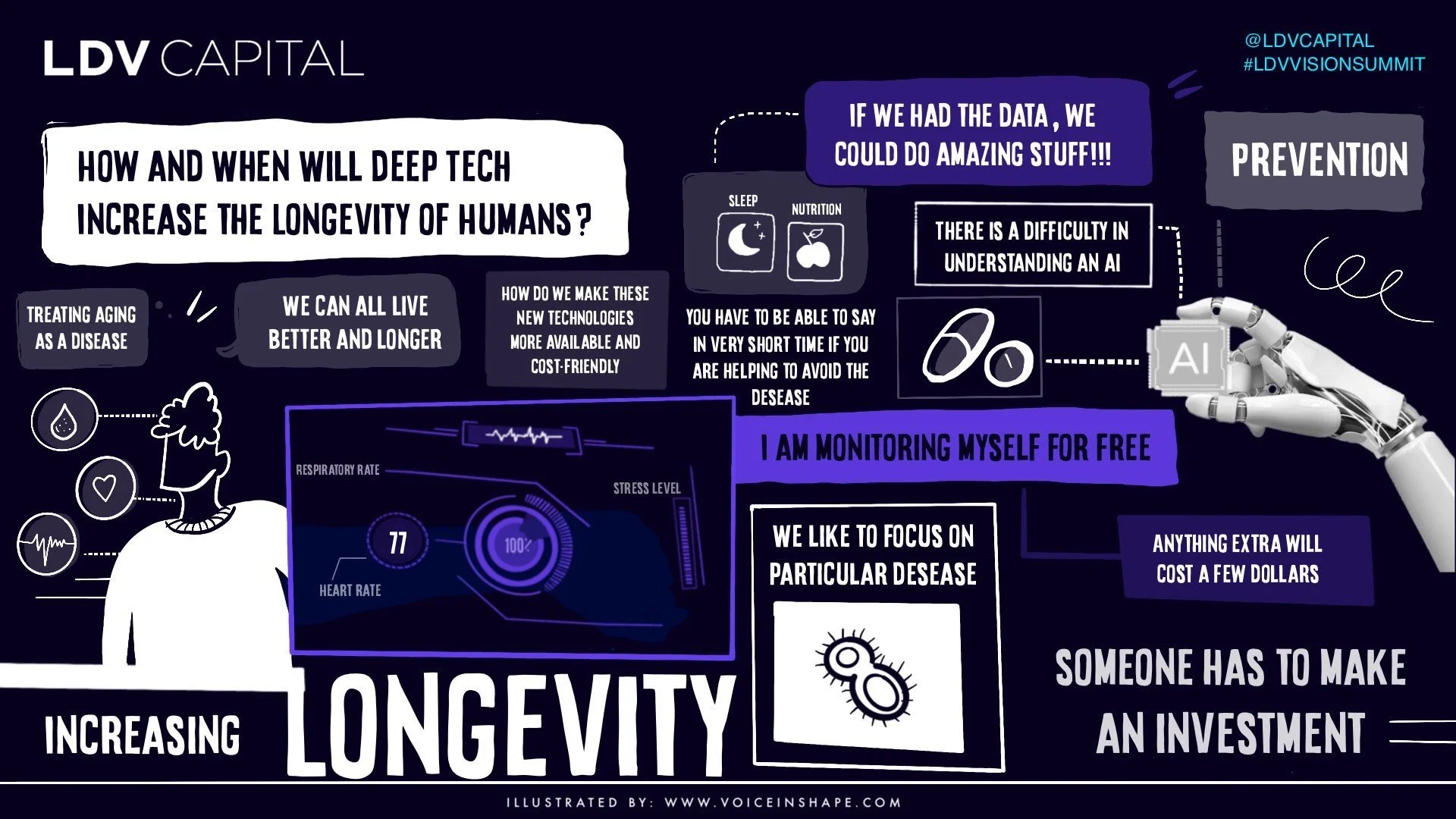

In 2019, we hosted a fireside chat between Thomas Reardon, computational neuroscientist and CEO of CTRL-labs, and LDV Capital’s Founder and General Partner, Evan Nisselson. Just four months later, Facebook acquired CTRL-labs for about $1 billion.

“CTRL-Labs, a brain-machine interface start-up acquired in 2019 by Facebook (now Meta), has developed noninvasive sensors that can pick up nerve signals traveling through the wrist and repurpose them to control computer displays, external devices or artificial limbs. If the reverse process could be mastered, then we could send new signals along existing sensory channels, or even directly into the brain, effectively hijacking biological inputs to create new artificial overlays. This is a challenging problem but one that scientists specializing in brain–machine interfaces are already poised to tackle.

My imaging colleagues Kim Butts Pauly and Shy Shoham are part of a research community working to change the behavior of neurons in the brain from the outside using focused ultrasound.

Other researchers are developing rudimentary implantable devices including artificial retinas and cochlear implants largely aimed at providing partial replacements for impaired senses of vision or hearing. Such interfaces could one day be fed not merely with coded visual or auditory signals but with ultrasound or x-ray signals. Our brains would have to learn the meaning of these signals, but once they did, we would be able to see through ordinary objects. Some distant variant of x-ray vision might one day be a superpower we all can share.”

This excerpt is adapted from The Future of Seeing: How Imaging Is Changing Our World, Chapter 12. Copyright © 2025 Columbia University Press. Used by arrangement with the Publisher. All rights reserved.

Shy Shoham, Co-Director at Tech4Health Institute, Professor at the Department of Ophthalmology, and Professor at the Department of Neuroscience and Physiology at NYU Grossman School of Medicine, at one of our LDV Happy Hour events in 2023. ©Robert Wright

Evan: Could technologies like artificial retinas or focused ultrasound shift from therapeutic tools to enhancements for healthy individuals?

Dan: There is clearly a lot of R&D to do beforehand, but once safety and efficacy are demonstrated, why not? The entire history of artificial imaging is arguably a history of perception-enhancement. Bringing such enhancements to our own bodies would in a sense just be a matter of coming home – of coming back to our senses.

Evan: You suggest that new imaging signals could expand our “envelope of awareness.” How might this change our sense of self and our relationship to our environments?

Dan: In his 1855 poem, Leaves of Grass, Walt Whitman wrote “I am large, I contain multitudes.” He described a radical broadening of perspective to encompass more than just the limited vessel of the self. I believe that there is a technological analogue. I believe that we will, increasingly, be able to see things literally from other perspectives. At the same time, some of the same technological developments will also enable us to block out signals that don’t interest us, and to focus just on what we want to see. We have already seen this tension between information access and information bubbles play out in modern electronic media. As I discuss in Chapter 12, I believe that the tension will only increase, and will lead us to some key decision points for our societies and our species.

Excerpt from Dr. Daniel Sodickson’s book “The Future of Seeing: How Imaging Is Changing Our World”

“Mary Lou Jepsen is a celebrated inventor and former Big Tech executive who founded a start-up called Openwater in 2016. That same year, she visited our NYU imaging center for a workshop on the changing face of imaging. In her lecture on imaging systems of tomorrow, and in many subsequent talks in high-profile venues, she laid out Openwater’s mission of using light not merely to image the brain but to map brain function in real time. One expressed goal of this work is to allow people to communicate directly mind to mind, without the bothersome intermediary of words. With virtual reality and its variants (technologies whose development she oversaw at Facebook), one can already see what someone else is seeing, but what Jepsen envisions is something else entirely—a sharing of perspective that extends beyond the merely visual.”

This excerpt is adapted from The Future of Seeing: How Imaging Is Changing Our World, Chapter 12. Copyright © 2025 Columbia University Press. Used by arrangement with the Publisher. All rights reserved.

At our 8th Annual LDV Vision Summit, we had the privilege of hosting a fireside chat titled “How to Make Moonshots into Moon Landings & Other Tales in Deep Tech Entrepreneurism” between LDV Capital’s Evan Nisselson and Dr. Mary Lou Jepsen.

Evan: You discuss Mary Lou Jepsen’s vision of direct brain-to-brain communication. Even if mind-reading is far off, what incremental steps toward “empathy technologies” seem realistic?

Dan: Here’s one small step: in recent years, researchers have taken functional MRI signals from a person looking at a picture, fed those signals into an AI model, and produced a rough replica of what that person was seeing. This is just an early inkling of what might become possible one day, which is as terrifying as it is intriguing. On the one hand, we have the prospect of radical empathy; on the other hand, the final death of privacy. As technology develops, we will have some choices to make…

Evan: Finally, if readers remember one key message from The Future of Seeing, what do you hope it is?

Dan: Imaging is like breathing. We all do it without giving it much thought. But imaging has a long history of transforming not only the way we see but also the way we think. There are more, and arguably greater, transformations coming our way in times to come – in healthcare, in social interaction, and in the everyday life of everyone on the planet. So keep your eyes open!

We hope you enjoyed this conversation as much as we did – learning about X-ray vision, “Google Maps for health” and upstream AI left a lasting impression on us. If it did the same for you, order your copy of The Future of Seeing. Dr. Sodickson’s perspective mirrors our belief that visual technologies and AI have been, and will continue to be, the driving force behind humanity’s next great leaps – a thesis that has guided LDV Capital since 2012 and will continue to guide us.