Machine Perception Will Eat the World

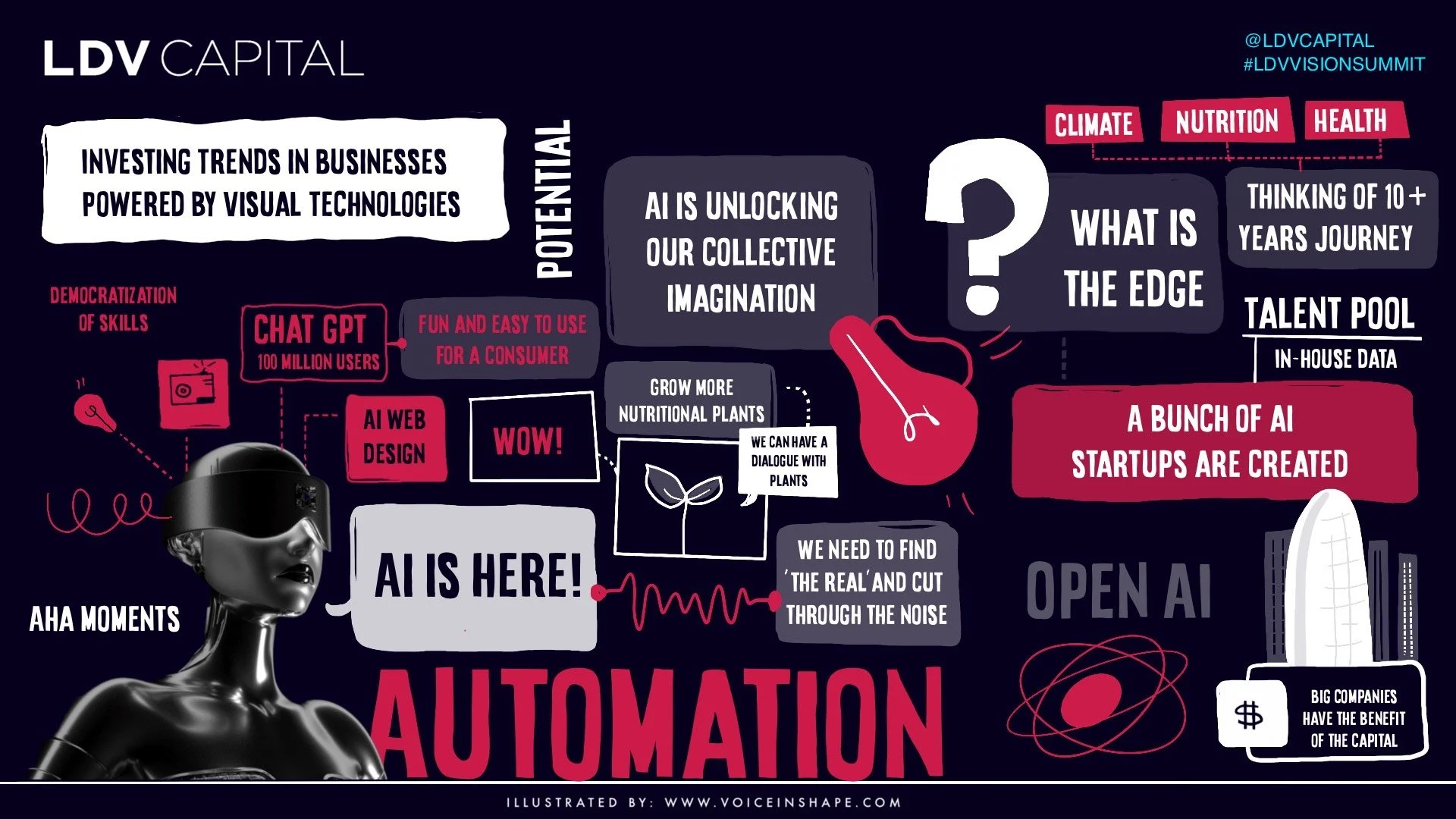

LDV Capital invests in people building businesses powered by visual technologies and AI. We thrive on collaborating with deep tech teams leveraging computer vision, machine learning, and artificial intelligence to analyze visual data. Join our Annual LDV Vision Summit – a premier global gathering of people in the visual tech sector – to discuss cutting-edge AI solutions aimed at improving the world we live in.

A decade ago, Marc Andreessen wrote that software is eating the world. Over the next 10 years, a more specific phrase will describe what is happening: machine perception is eating the world.

In the late 1980s, I had the lucky timing to watch the first autonomous vehicle drive around Schenley Park, the moving mirrors of its microwave-oven-sized LiDAR clearly visible through a glass cover.

In the decades since, I have been patiently waiting for machine perception to become a practical reality. Not the kind of machine perception that analyzes mortgage applications or finds cancer in MRIs, although those are fascinating. I mean the type of machine perception that lets a car, or any device, sense and comprehend its environment in a way that mimics sentience. I am waiting for machines that can understand and navigate their environment at least as well as a well-trained dog to be part of everyday life.

The DARPA Grand Challenge moved machine perception for vehicles from research to the cusp of commercial reality. The DARPA challenge drove software developments and also spurred large-scale investment in new types of sensors – “active” sensors that, unlike “passive” cameras, are not reliant on light from the environment.

Now there are dozens of companies working on machine perception specifically for autonomous cars. Automotive companies, startups, as well as tech giants that a few years ago had no interest in the low-margin, last-century transportation industry are all working on the sensors and software to achieve machine perception.

With all this capital and brainpower focused on autonomy for one specific type of machine, should we be preparing ourselves for a time in the near future when machine perception will be a practical reality?

When this new technology arrives, will it first appear in your car? Or will it change your life in other ways first?

Automotive is a difficult initial target market for machine perception. The penalties for failure are high, the regulatory burdens are significant, the technical requirements are challenging, the operating environment is chaotic… I could go on, but you get the idea.

Let’s ignore the automotive and transportation industries as initial target markets for machine perception, at least for a moment. Yes, it's a huge industry, offering huge scale, but with VW, Ford, Tesla, Google, Apple, Aurora, Lyft, every automotive supplier, 80 other companies I could list, and perhaps even Domino's Pizza competing for at least a piece of the solution, it’s difficult to identify clear winners at any investment level, and definitely not at the early stages. On such a large scale, with competition come low margins. Perhaps those companies are racing towards a future where, if they are successful, the number of vehicles will shrink dramatically.

At some future date, for many people, owning a car may make as much sense as owning an elevator or a train car.

Are there simpler environments to test out the fusion of new sensor and software technologies?

If so, when and where will machine perception penetrate and change society first? How big can these opportunities be? What business opportunities will this new technology create? Will any of these new opportunities be addressable by startups?

To answer these questions we can take the capabilities being developed for automotive and project performance umbrellas coupled with costs, and then see if any interesting value propositions can pop up.

The first assumption we have to make is which types of sensor technology these future machine perception systems will use.

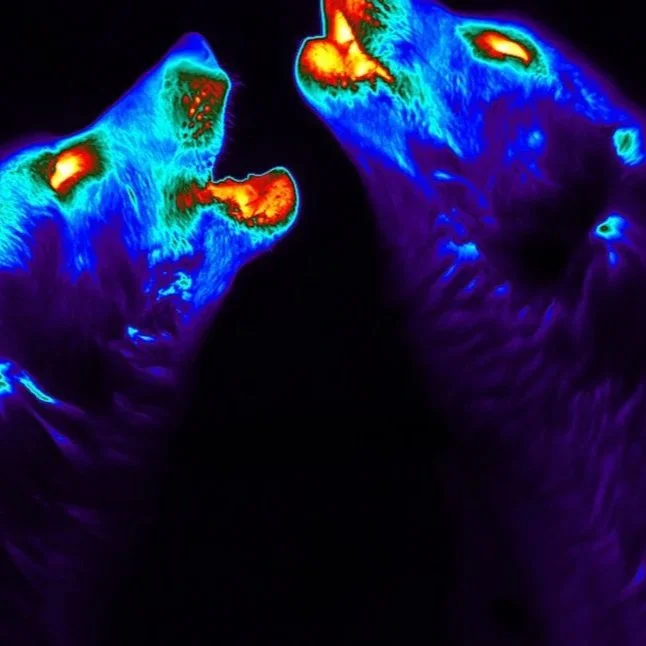

CMOS sensors have made visual cameras inexpensive, and inexpensive computing power turns cameras into amazing automated perception machines. Yet passive cameras have limitations in real-life situations with uncontrolled lighting. Some type of active sensing is required for those moments when a passive optical system, i.e. a camera, visible light, or otherwise, is blinded. Some kind of 3D sensing is necessary to turn a complicated, imperfectly reflected scene into a solvable geometry problem.

Active sensors like LiDAR and RADAR work where cameras don’t, and one or both of these active sensors are vital for every autonomous car project. Unfortunately, RADAR and LiDAR are currently too large and expensive for mass-market, high-quality perception machines.

Well over 100 companies are working on automotive-grade LiDAR or RADAR. Since August 2020 we have seen half a dozen LiDAR startups go public, with a combined market cap of over $10B. That is a lot of public market value creation around a technology nobody has ever heard of! Only two of these companies have commercially available products. Several more RADAR and LiDAR SPAC-IPOs are in the works.

If we assume there is a predictive signal in large numbers of investment decisions, then according to these companies’ IPO presentations, by 2025 we should expect a $500, 20 Watt, 10 x 10 x 10 cm active sensor with specs good enough to enable a multi-ton vehicle to navigate safely at 80 mph. Let’s throw in an integrated IMU, GPS, and camera system for free, as they are practically free now. Add a $500 high-end processor capable of real-time scene analysis good enough for an automotive application, and by 2025 we will have machine perception of complex environments for $1000.

It's difficult to assess the current price of a comparable system, but adding up the parts you see on self-driving demo vehicles can easily get you to $100K. Assuming some price reductions for quantity manufacturing, perhaps a realistic price comparison for today’s machine perception systems might be $35K.

“Ubiquitous machine perception system capable of analyzing complex scenes in uncontrolled lighting” is an unwieldy term for a blog. I am going to give it a name. I will call it “Shirley”.

Whenever you have a new technology, an old technology that makes sudden leaps in effectiveness, or the combination of existing technologies in a new way that yields nonlinear improvements in some way, you have bursts in the number of opportunities for new products and services. Shirley is dependent on the combination of sensors, software, and embedded or edge computing power. Shirley has been evolving for decades but it is about to fall off a cliff in terms of cost, making Shirley available for implementation in many situations where it is currently unthinkable. This is a great opportunity for startups and investors, so let’s start thinking about it.

Value propositions for Shirley can be broken down into two categories. Shirley will enable a machine to scurry around a complicated, uncontrolled environment, or Shirley can help a machine track objects scurrying around a complicated, uncontrolled environment. Many applications will make use of both value propositions simultaneously but it's still helpful to identify problems by isolating the value that Shirley brings.

In the first category, we can lump all the mobile applications regardless of size or purpose. We already see early Shirleys being applied to ADAS in cars and mobility systems like Zoox, Nuro, and May Mobility, but applications also exist in construction, mining, airports, plant logistics, factory operations, and any situation where people are currently operating vehicles inside or outside.

If Shirley becomes a competitive addition once its price is 1/20th of the total sale price of a device, then with a $1000 Shirley, equipment becomes ripe for automation at a sale price of $20,000 or more. That includes most of the machines that currently require human drivers.

At our 2021 Shirley price of $35K, that would imply only machines with a sale price of roughly $700K are worthy of automation. At that price, the operational cost of machines, or the cost of avoidable human error, drives adoption, which is why we currently have giant autonomous mining vehicles, but not personal cars.
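To make the rule of thumb concrete, here is a minimal Python sketch of the arithmetic. The 20x multiple is simply the 1/20th assumption from this post, not an industry standard, and the function name `min_device_price` is made up for illustration.

```python
# Assumption from this post: Shirley becomes a competitive add-on once it
# costs no more than 1/20th of the device's sale price.
PRICE_MULTIPLE = 20


def min_device_price(shirley_price, multiple=PRICE_MULTIPLE):
    """Lowest device sale price at which adding Shirley is competitive."""
    return shirley_price * multiple


# Shirley price points mentioned in this post.
for label, price in [("2021 estimate", 35_000), ("2025 projection", 1_000)]:
    threshold = min_device_price(price)
    print(f"{label}: ${price:,} Shirley -> devices priced at ${threshold:,} and up")
```

Changing `PRICE_MULTIPLE` shows how sensitive the addressable market is to that one assumption: a buyer willing to spend 1/10th of the device price on perception halves every threshold.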

At 2021 prices there might still be opportunities for Shirley-based automation. Factories, shipyards, industrial construction, and airports all offer high-value equipment whose utility may be limited by human operators. They are also relatively controlled environments where errors or downtime are expensive, but where failures may be less costly than a software-generated pile-up on the 405 or Long Island Expressway.

While the sensor package and software might be a little different, boats and aircraft are already highly automated and should probably be completely so.

Boats and aircraft have so much more room to maneuver without risk of collision, and unlike with cars, human senses require augmentation by radar, GPS, etc., to operate boats or planes in normal commercial situations. Automation in a situation where human senses are inadequate in the first place is to be expected. Humans do not belong in cockpits, and I say that as someone who loves being a pilot.

First-generation Shirley machines, which I will call “Shirl-Es” in a Pixar reference, will look a lot like current ones. They will likely include a cockpit or controls that let a human operate them. Second-generation Shirl-Es, or innovative first-generation ones, will not have any human controls. They might be smaller or bigger than the first generation, but they will be better at their functions than Shirl-Es that have to waste size and materials on anthropocentric controls.

When I was exploring automated farm equipment about a decade ago I learned that tractors from companies like John Deere or Caterpillar were getting bigger, with cabs that offered more functionality, even as the tractor functions themselves were becoming highly automated. It was not that farmers did not trust automated tractors, nor did farmers think their job was to drive the tractors. After interviewing 40 specialty crop farmers I learned that farmers did not want to be driving tractors. If they had to be in a tractor, farmers wanted automation and comfort so they could use it as a wireless office to do other work, work that had nothing to do with being in a tractor.

When I showed farmers my sales pitch for smaller, non-human-drivable automated tractors, complete with renderings, they loved the idea at the right price point. A 3-to-1 price improvement over their next potential tractor purchase consistently sold. This corresponded with a three-machine install of $50K small Shirley-tractors, $150K in total, to displace a $450K traditional tractor purchase. In specialty crop farming, migration to larger numbers of automated machines operating on a per-acre basis, at a lower capital cost per acre, is likely to follow automation.

Similar migrations will happen in any facility where people are driving around devices now. Construction and plant equipment will get smaller, more devices will be used per unit work, but capital and operating costs over non-Shirley work sites will decrease as quality and precision increase.

Interesting opportunities and innovations will arise when industries are using fleets of these Shirley-enhanced devices. A dozen small devices might work together in ways that will seem bizarre to a passerby but may accomplish things far out of proportion compared to what is possible today. New opportunities in asset management, process automation, and application-specific development will open up, not necessarily dependent on or tied to specific device manufacturers. Without investments in software services, hardware manufacturers will not be able to meet a long tail of customer needs, leaving much of this opportunity to smaller, innovative companies.

Shirley may usher in an environment similar to the early days of the personal computer, where Bill Gates and Mitch Kapor were able to create lasting and temporary business empires, but on the backs of forklifts and load haulers rather than on the screens and hard drives of Intel 80x86 based personal computers.

How will Shirley make an impact beyond mobile machines?

Beyond automating mobile devices, Shirley can let machines “see” and monitor a scene. Mobile robots are cool but monitoring complicated scenes, regardless of lighting, is a value proposition that might lead to faster adoption cycles for Shirley, and ultimately have more impact on our economy.

At $1000 per install, Shirley’s implementation in a monitoring role will be limited to high-value installations: protecting people from hazardous devices in plants and factories, and securing borders or remote infrastructure assets like power stations. Perhaps some high-value smart city applications can be addressed at a $1000 price point.

New sensor technologies and less expensive compute will inevitably drive the price of Shirley lower. At $100, any $2000 consumer device can become Shirley-fied. Consumer devices in your home, like smart TVs, will have built-in, highly accurate 3D monitoring capabilities for Minority Report-like device control, and also for AR gaming. These opportunities should be addressable by startups.

When Shirley gets to $100, it will also impact consumer-facing mobile robotic applications, for both outdoor and indoor applications. Autonomous mobile robots will be hauling our groceries and luggage.

When will Shirley get to $100? Is that even possible? Well, the short answer is likely to be yes. The critical path to making that happen is innovation in either machine perception software or sensor technologies. It's unlikely for some unknown technology to radically improve embedded computing power within the next 5 years.

Many technologies are currently being developed, by startups, of course, that have the potential to radically reduce the costs of active sensing.

This is a graph of possible Shirley pricing over the next decade. Note that the y axis is logarithmic, so the curve is much steeper than it looks. If we start at our current $35K Shirley pricing and apply the usual pricing patterns of semiconductor technology, we get to a $100 Shirley by 2030.
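For a rough sense of how steep that curve is, the sketch below works out the yearly price multiplier implied by the two end points. It assumes a constant percentage drop every year (a straight line on a logarithmic axis), which is my simplification of "usual pricing patterns" and not something read off the graph. The function names are illustrative.

```python
# Assumption: a constant percentage price drop each year, i.e. a straight
# line on a logarithmic price axis. The post only gives the two end points.
START_YEAR, START_PRICE = 2021, 35_000   # the post's estimate of today's price
TARGET_YEAR, TARGET_PRICE = 2030, 100    # the post's projected end point


def annual_price_factor(start_price, end_price, years):
    """Yearly multiplier that takes start_price to end_price in `years` steps."""
    return (end_price / start_price) ** (1 / years)


def projected_price(year, factor, start_year=START_YEAR, start_price=START_PRICE):
    """Price in `year` if the price is multiplied by `factor` every year."""
    return start_price * factor ** (year - start_year)


factor = annual_price_factor(START_PRICE, TARGET_PRICE, TARGET_YEAR - START_YEAR)
print(f"Implied yearly price multiplier: {factor:.2f}")
for year in range(START_YEAR, TARGET_YEAR + 1):
    print(f"{year}: ${projected_price(year, factor):,.0f}")
```

Under this assumption the price has to fall by roughly half every year for nine years in a row. Passing a shorter horizon to `annual_price_factor` shows how much steeper the curve must be to reach the same price sooner.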

The blue line shows what happens if a new sensor or software technology enters the market that offers the potential to bring that $100 Shirley price five years earlier, displacing existing technology represented by the black line. There are several contenders for “blue line” innovations, one of them being LiDAR-on-a-chip company Voyant Photonics.

Voyant Photonics has developed a revolutionary small LiDAR on a chip with the capabilities of softball-size LiDARs.

As Shirley travels down a non-linear price and performance curve it becomes applicable to applications that do not make sense now, applications that no one is even thinking about. In 2000, when CMOS image sensors were new, who was expecting mobile phones, or social media, to be the primary means for consumers to experience imagery? Think of all the investment opportunities that have been instigated by that penetration of CMOS-based cameras between 2005 and 2010 (Instagram, Pinterest, etc). The same could be said of the Internet in 1996, or the Personal Computer in 1986. The commercialization of Shirley will be no less important when looking back in 2026.

I don’t know what these new applications and opportunities are. My mind is not that flexible anymore. I do know that the best ideas do not make sense now, while humans still have to operate vehicles and devices cannot understand their environments. Continuous last-mile package delivery? Roombas cleaning city streets at night? Remote patient care? Immersive, outdoor augmented reality?

Let me know your thoughts, check out job opportunities at Voyant Photonics, and reach out if you are speeding up the Shirleyfication by building perception systems and visual technologies.