We're Taking Billions Of Photos A Day—Let's Use Them To Improve Our World!

This keynote is from our 2015 LDV Vision Summit. It was also published in our LDV Vision Book 2015.

Pete Warden, Engineer, Google

©Robert Wright/LDV Vision Summit

My day job is working as a research engineer for the Google Brain team on some of this deep learning vision stuff. But, what I'm going to talk about today is actually trying to find interesting, offbeat, weird, non-commercial applications of this vision technology, and why I think it's really important as a community that we branch out to some weird and wonderful products and nonprofit-type stuff.

Why do I think we need to do this? Computer vision has some really deep fundamental problems, I think, the way that it's set up at the moment.

The number one problem is that it doesn't actually work. I don't want to pick on Microsoft because their How-Old demo was amazing. As a researcher and as somebody who's worked in vision for years, it's amazing we can do the things we do. But if you look at the coverage from the general public, they're just confused and bewildered about the mistakes that it makes. I could have picked any recognition or any vision technology. If you look at the general public's reaction to what we're doing, they're just left scratching their heads. That just shows what a massive gap in expectations there is between what we're doing as researchers and engineers and what the general public actually expects.

What we know is that computer vision, the way we measure it, is actually starting to kind of, sort of, mostly work now -- at least for a lot of the problems that we actually care about.

This is one of my favorite examples from the last few months, where Andrej Karpathy from Stanford actually tried to do the ImageNet object recognition challenge as a human, just to see how well humans could actually do at the task that we'd set the algorithms. He actually spent weeks training for this, doing manual training by looking through and trying to learn all the categories, and spent a long time on each image. Even at the end of that, he was only able to beat the best of the 2014 algorithms by a percentage point or two. His belief was that that lead was going to vanish shortly as the trajectory of the algorithm improvements just kept increasing.

It's pretty clear that, by our own measurements, we're doing really well. But nobody's impressed that a computer can tell them that a picture of a hot dog is a picture of a hot dog. That doesn't really get people excited. We really have a perception problem when we're going out and talking to partners and to the general public. The applications that do work tend to be around security and government, and they aren't particularly popular either. The reason this matters is that not only do we have a perception problem, but we also aren't getting the feedback that we need from working with real problems when we're doing this research.

What's the solution? This is a bit asinine. Of course we want to find practical applications that help people. What I'm going to be talking about for the rest of this is just trying to go through some of my experiences, trying to do something a little bit offbeat, a little bit different, and a little bit unusual with nonprofit-type stuff -- just so we've actually got some practical, concrete, useful examples of what I'm talking about.

The first one I'm going to talk about is one that I did that didn't work at all. I'm going to use this as a cautionary tale of how not to approach a new problem that's trying to do something to help the world. I came into this with the idea that...I was working at my startup Jetpac. We had hundreds of millions of geotagged Instagram photos that were public that we were analyzing to build guides for hotels, restaurants, bars all over the world. We were able to do things like look at how many photos showed mustaches at a particular bar to give you an idea of how hipster that particular bar was. It actually worked quite well. It was a lot of fun, but I knew that there was really, really interesting and useful information to solve a bunch of other problems that actually mattered.
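To make the mustache example concrete, here is a minimal sketch of that kind of per-venue aggregation. It is not Jetpac's actual pipeline, which the talk doesn't describe. It assumes a detector has already flagged each photo, and the function name, the `(venue_id, has_mustache)` record layout, and the `min_photos` cutoff are all hypothetical.

```python
from collections import defaultdict


def mustache_share_by_venue(photos, min_photos=3):
    """Return {venue_id: fraction of that venue's photos flagged as mustache}.

    `photos` is an iterable of (venue_id, has_mustache) pairs. Both fields are
    hypothetical stand-ins for whatever an upstream detector would produce.
    Venues with fewer than `min_photos` photos are skipped, because a share
    computed from one or two photos is mostly noise.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for venue_id, has_mustache in photos:
        totals[venue_id] += 1
        if has_mustache:
            hits[venue_id] += 1
    return {
        venue: hits[venue] / total
        for venue, total in totals.items()
        if total >= min_photos
    }


# Invented sample data, purely for illustration.
sample = [
    ("bar_a", True), ("bar_a", True), ("bar_a", False), ("bar_a", True),
    ("bar_b", False), ("bar_b", False), ("bar_b", True), ("bar_b", False),
    ("bar_c", True),  # only one photo, so this venue is not scored
]
scores = mustache_share_by_venue(sample)
```

Using a share rather than a raw count keeps a busy venue with lots of photos from looking more "hipster" just because it is photographed more often.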

One of the things that I thought I knew was that pollution gives you really, really vivid sunsets. This was just something that I had embedded in my mind, and it seemed like it would be something that I should be able to pull out from the millions of sunset photos we had all over the world. I went through, I spent a bunch of time analyzing these, looking at public pollution data from cities all over the US, with the hope that I could actually build this sensor, just using this free, open, public data to estimate pollution and track pollution all over the world almost instantly. Unfortunately it didn't work at all. Not only did it not work, I was actually seeing worse sunsets where there was more pollution.
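The talk doesn't say how the sunset photos were compared against the pollution data. One conventional first check would be a plain Pearson correlation between a per-city pollution reading and a per-city "vividness" score, sketched below. The numbers are invented for illustration and are not the talk's data. The `pearson` helper and both variable names are assumptions.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of the same length, at least 2 long")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Made-up per-city values (NOT real measurements): a pollution index and an
# average sunset "vividness" score, e.g. mean colour saturation of the photos.
pollution = [10, 20, 30, 40, 50]
vividness = [0.9, 0.8, 0.6, 0.5, 0.3]

r = pearson(pollution, vividness)
# A clearly negative r would match what the talk reports: more pollution,
# less vivid sunsets.
```

A check like this only says whether the two series move together. It can't tell you why, which is where the domain experts come in.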

At that point, I did what I should have done at the start, and went back and actually looked at what the atmospheric scientists were saying about pollution and sunsets. It turns out it's only very uniform particulate pollution really high up in the atmosphere -- which is what you typically get from volcanoes -- that actually gives you vivid sunsets. Other kinds of pollution, as you might imagine if you've ever lived in LA, just give you washed out, blurry, grungy sunsets. The lesson for me from this was that I really should have been listening to, and driven by, the people who actually understood the problem, rather than jumping in with my shiny understanding of technology while not really understanding the domain at all.

Next I want to talk about something that really did work, but I didn't do it. This is actually one of my favorite projects of the last couple of years. The team at onformative, they took a whole bunch of satellite photos and they ran face detectors across them. Hopefully you can see, there appears to be some kind of Jesus in a cornfield on the left hand side, and a very grumpy river delta on the right. I thought this was brilliant. This is really imaginative. This is really different. This is really joining together a data set with a completely different set of vision technologies and shows how far we've come with face recognition.
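The talk doesn't describe how onformative's pipeline was built, so the following is only a rough sketch of the general idea of running a detector across a large image tile by tile. The `iter_tiles` and `scan` names, the tile and stride sizes, and the `detect` callback are all hypothetical. In practice `detect` would wrap a real face detector.

```python
def iter_tiles(width, height, tile, stride):
    """Yield (left, top, right, bottom) boxes on a regular grid.

    Tiles start at multiples of `stride`. If the image size is not aligned
    with the grid, a partial strip at the right or bottom edge is skipped.
    """
    if tile <= 0 or stride <= 0:
        raise ValueError("tile and stride must be positive")
    for top in range(0, max(height - tile, 0) + 1, stride):
        for left in range(0, max(width - tile, 0) + 1, stride):
            yield (left, top, min(left + tile, width), min(top + tile, height))


def scan(width, height, detect, tile=256, stride=128):
    """Return every tile box for which `detect(box)` is truthy.

    `detect` is a stand-in: any callable that takes a box and reports whether
    something face-like was found in that region of the image.
    """
    return [box for box in iter_tiles(width, height, tile, stride) if detect(box)]


# Stand-in detector for illustration only: "fires" on the tile starting at x=128.
found = scan(512, 256, detect=lambda box: box[0] == 128)
```

Overlapping tiles (a stride smaller than the tile size) mean a pattern that straddles one tile boundary still falls fully inside a neighbouring tile.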

But shortly after I saw this example, I actually ran across this news story about a landslide in Afghanistan that had killed over a thousand people. What was really heartbreaking about this was that the geologists looking at just the super low-res, not-very-recent satellite photos and the elevation data on Google Earth said that it was painfully, painfully clear that this landslide was going to happen.

What I'm going to just finish up with here is that there's a whole bunch of other stuff that we really could be solving with this:

- 70 other daily living activities

- pollution

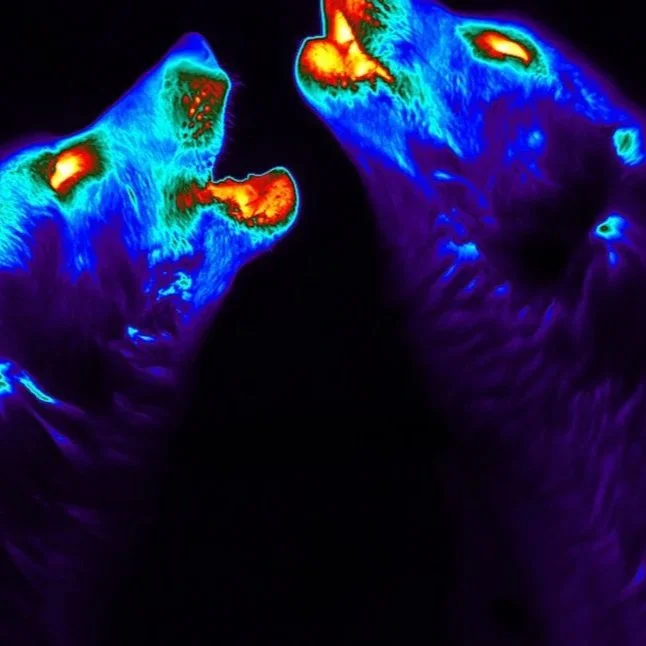

- springtime

- rare wildlife.

What I'm trying to do is actually just start a discussion mailing list here at Vision for Good, where we can bring together some people who are working on this vision stuff and the nonprofits who actually want to get some help. I'm really hoping you can join me there. No obligation, but I want to see what happens in this.