Accelerating Artificial Intelligence With Light

LDV Capital invests in people building businesses powered by visual technologies. We thrive on collaborating with deep tech teams leveraging computer vision, machine learning, and artificial intelligence to analyze visual data. We are the only venture capital firm with this thesis. We regularly host Vision events – check out when the next one is scheduled.

At last year’s LDV Vision Summit, Dr. Nicholas Harris – Founder & CEO of Lightmatter – gave a keynote speech on the next-generation computing platform they are building for AI and its value for autonomous vehicles in the next 5, 10, and 20 years.

Nicholas holds a PhD from MIT, where his doctoral thesis was on programmable nanophotonics for quantum information processing and artificial intelligence. Previously, he was an R&D engineer at Micron Technology, where he worked on DRAM and NAND circuits and device physics. He has published 55 academic papers and owns 7 patents.

Watch this 5-minute video or read the text below.

Moore’s Law is 55 and Still Relevant

You've heard a lot about the end of Moore's Law*.

*Moore's Law refers to Gordon Moore's observation that the number of transistors on a microchip doubles about every two years, while the cost of computers is halved. In practice, it means we can expect the speed and capability of our computers to increase every couple of years, and we will pay less for them.

People have been talking about this for decades, and I'm here to tell you that it's not correct, or at least not the right way to think about things. You even have people repeating it who are at Stanford and at NVIDIA at the same time, and people at Google and Berkeley: they're all talking about the end of Moore's Law. But the real problem isn't that you can't shrink transistors. You still can.

The problem is that when you shrink transistors to build your computers, the energy they use no longer scales down with them. This is a fundamental issue, and it affects the performance of computers now and going forward. Think back to the computer you might have had in 2005: it likely had a three-gigahertz processor, an amount of RAM similar to today's, and so on. Today it's basically the same story.

Because the energy of transistors isn't scaling, computer architects have done a few things to try to keep these chips cool as they pack in more and more transistors.

One of them is they've lowered the clock frequency so it's been stuck at a few gigahertz for a while.

The other trick that you can play is you can say, "All right, well my chip's too hot, so I'm going to stop packing compute elements. What I'm going to do is fill it with memory. Memory doesn't use a lot of power." But the downside is you're not building a faster computer.

The third option, and this is what you hear from Intel and other chip makers like AMD, is: "Well, let's just 3D stack these things and have more density." The problem is that if you've got something that's really hot, stacking more things on top of it doesn't help you get the heat out.


So you've got some issues, not with Moore's Law but with energy scaling in transistors, and this is happening at the same time that AI is really taking off. It's interesting to look at how much compute is required to train state-of-the-art neural nets. That amount of compute can be quantified with a metric called the petaflop/s-day**.

**A petaflop/s-day (pfs-day) consists of performing 10^15 neural net operations per second for one day, or a total of about 10^20 operations.
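
The footnote's arithmetic can be checked in a few lines. This is a minimal sketch; the helper name `pfs_days_to_operations` is hypothetical and introduced only for illustration.

```python
# One petaflop/s-day: 10^15 operations per second, sustained for one day.
OPS_PER_SECOND = 10**15
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def pfs_days_to_operations(pfs_days: float) -> float:
    """Convert petaflop/s-days to a total operation count (hypothetical helper)."""
    return pfs_days * OPS_PER_SECOND * SECONDS_PER_DAY


total = pfs_days_to_operations(1)
print(f"{total:.2e} operations")  # 8.64e+19, i.e. about 10^20
```

The exact figure is 8.64 x 10^19, which the footnote rounds to about 10^20.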

Think of it like kilowatt-hours on your electricity bill at home. The amount of compute required is actually growing exponentially, at a rate five times faster than Moore's Law. So you're really not going to be able to keep up with this demand without a new technology. Unless someone comes up with one, you're going to see this growth saturate.
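
To get a feel for what "five times faster" implies, here is a rough sketch. It assumes a Moore's Law doubling period of 24 months (from the footnote above) and reads "five times faster" as a doubling period one fifth as long. That reading is an assumption made for illustration, not a figure from the talk, and the names below are hypothetical.

```python
MOORE_DOUBLING_MONTHS = 24.0  # "doubles every two years"
SPEEDUP = 5.0                 # assumed meaning: doubling period is 5x shorter
AI_DOUBLING_MONTHS = MOORE_DOUBLING_MONTHS / SPEEDUP  # 4.8 months


def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative growth after `months`, given a fixed doubling period."""
    return 2.0 ** (months / doubling_months)


for years in (1, 2, 5):
    months = 12 * years
    moore = growth_factor(months, MOORE_DOUBLING_MONTHS)
    ai = growth_factor(months, AI_DOUBLING_MONTHS)
    print(f"{years} yr: transistor count x{moore:.1f}, training compute x{ai:.1f}")
```

Under these assumptions, two years gives a 2x increase in transistor count against roughly a 32x increase in compute demand, which is why the gap can't be closed by transistor scaling alone.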

Photonic Processors

Here you can see a photograph of one of Lightmatter’s prototype chips presented by Nicholas Harris at LDV Vision Summit 2019 © Robert Wright

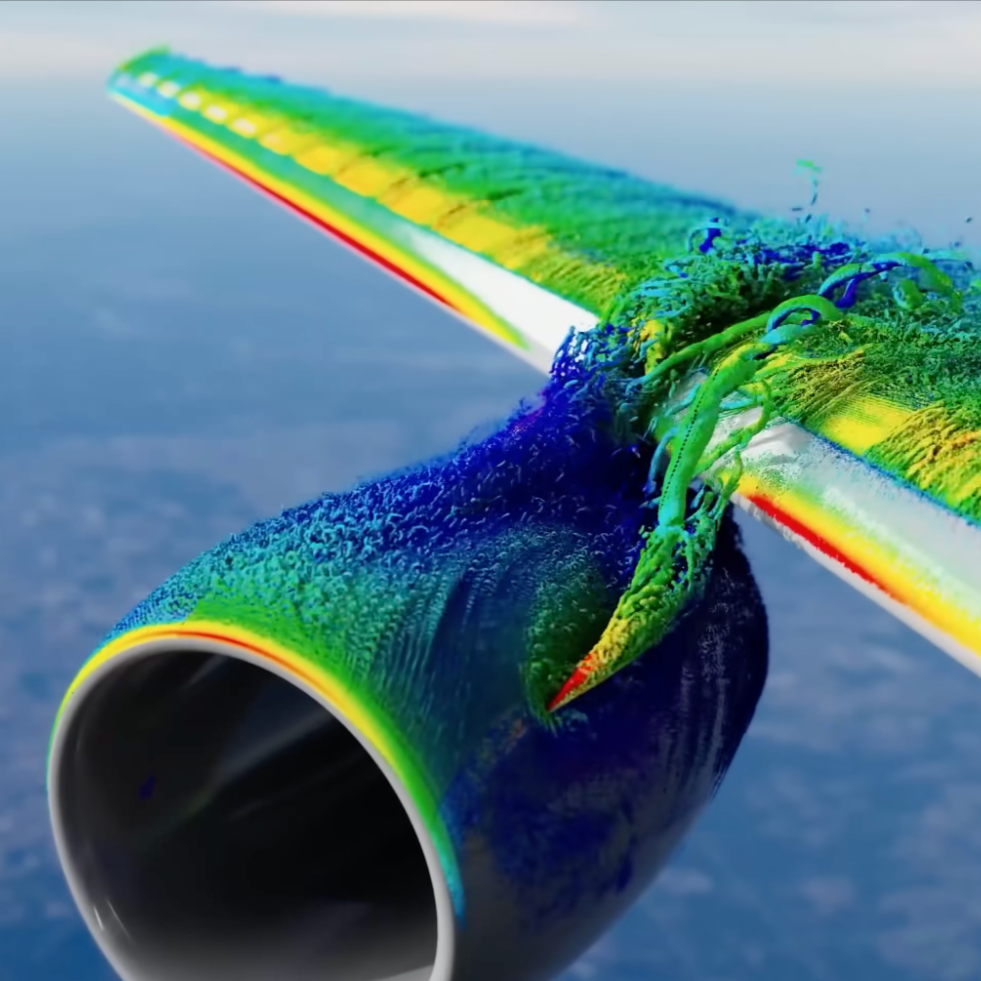

Around 2015, Google had a result on a new kind of processor called the tensor processing unit. In this processor they had the insight that AI and deep learning are really underpinned by matrix multiplication. So why not just build a matrix processor? These matrix processors are made of 2D arrays of multiply-accumulate units, and we can look at the specs of one of these units in an electronic system. For an 8-bit unit with an area of 50 microns by 50 microns, you use about five milliwatts, with a maximum speed of 2 gigahertz.
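
To make the matrix-processor idea concrete, here is a minimal sketch of matrix multiplication written as explicit multiply-accumulate (MAC) steps, the operation each cell of such a 2D array performs. It is plain Python for illustration, not a description of how Google's TPU is actually implemented, and the function names are hypothetical. The last lines turn the figures quoted above (about 5 mW at 2 GHz) into an energy per operation, assuming one MAC per clock cycle, which the talk does not state.

```python
from typing import List

Matrix = List[List[int]]


def mac(acc: int, a: int, b: int) -> int:
    """One multiply-accumulate step: acc + a * b."""
    return acc + a * b


def matmul_mac(A: Matrix, B: Matrix) -> Matrix:
    """Multiply A (n x k) by B (k x m) using only MAC steps."""
    n, k, m = len(A), len(B), len(B[0])
    assert all(len(row) == k for row in A), "inner dimensions must match"
    C = [[0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            acc = 0
            for p in range(k):
                acc = mac(acc, A[i][p], B[p][j])
            C[i][j] = acc
    return C


print(matmul_mac([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]

# Energy per MAC from the quoted figures,
# assuming (not stated in the talk) one MAC per clock cycle.
POWER_W = 5e-3
CLOCK_HZ = 2e9
energy_pj = POWER_W / CLOCK_HZ * 1e12
print(f"{energy_pj:.1f} pJ per MAC")  # 2.5 pJ per MAC
```

An n x k by k x m product needs n * k * m MAC steps, which is why hardware built from many MAC units working in parallel suits this workload.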

Why did I talk about the area? Well, if you've been around the optics community, or heard electronics people weigh in on it, you know they typically say that optics are big and that you can't pack them in the same way as transistors. But you really need to compare apples to apples.

Let’s look at the photonic elements that we build at Lightmatter. They are an optical version of the multiply-accumulate unit that's used in electronics and in Google's TPU. The area is the same, the power is less than a thousandth, and you can run at tens of gigahertz. In fact, the theoretical bandwidth for this system at our wavelength is 173 terahertz, so it's quite fast. It’s a pretty interesting technology: it takes something like the TPU and plugs optics into it, and you keep the same trappings and interfaces.
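
The comparison can be put in rough numbers. The sketch below uses the electronic figures quoted earlier in the talk (about 5 mW at 2 GHz) and, for the photonic unit, takes "less than a thousandth" of the power as exactly one thousandth and "tens of gigahertz" as 10 GHz. Both photonic values are stand-in assumptions for illustration, not Lightmatter specifications, as is the one-operation-per-cycle model. The `MacUnit` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MacUnit:
    """Power and clock figures for one multiply-accumulate unit."""
    name: str
    power_w: float
    clock_hz: float

    @property
    def energy_per_op_j(self) -> float:
        # Assumes one multiply-accumulate per clock cycle.
        return self.power_w / self.clock_hz


# Electronic figures as quoted in the talk.
electronic = MacUnit("electronic", power_w=5e-3, clock_hz=2e9)
# Photonic figures are assumed stand-ins: 1/1000 the power, 10 GHz clock.
photonic = MacUnit("photonic (assumed)", power_w=5e-3 / 1000, clock_hz=10e9)

ratio = electronic.energy_per_op_j / photonic.energy_per_op_j
print(f"energy per operation ratio: about {ratio:.0f}x")
```

With these stand-in values the energy per operation differs by a factor of roughly 5,000. Since the talk gives the power only as an upper bound and the speed only as a range, the real ratio could be higher.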

What are the implications of this kind of technology? Our system is faster and more efficient: we are able to put out more compute for the same amount of power. This will enable applications like autonomous driving to close the gap between L3 (vehicles are able to drive from point A to point B if certain conditions are met) and L5 (full driving automation). As cars are electrified, it becomes increasingly important not to draw too much power from the batteries, because people want range in their cars; it's one of their main complaints. The other implication is that you'll be able to continue to power the growth of AI and new advanced algorithms, and just keep scaling the technology.