Visual Data is the Crux of AI & Automation for the Future of All Industries

Robots across industries such as agriculture use cameras and visual technologies to carry out their tasks automatically.

In an era marked by automation and artificial intelligence (AI), visual data is enabling machines to perform as well as, or better than, humans. Because most of the information our brains analyze is visual, machines that take on human tasks need to capture, analyze and learn from visual data.

The term “automation” originated in the 1940s, when it was used to describe the trend of automating processes in the automotive industry. We are now in the age of the fourth industrial revolution. Intelligent automation streamlines processes that would otherwise rely on manual tasks or legacy systems, which can be resource-intensive, costly, and prone to human error. Automation is now revolutionizing industries from healthcare and precision medicine to manufacturing and construction, and agriculture and sustainability. The global industrial automation market is already worth more than $175B and is forecast to reach roughly $265B by 2025.

Healthcare & Precision Medicine

Healthcare workflows are being digitized: AI is improving the speed and efficiency of operations such as diagnostics and drug discovery, and robotic automation assists with surgical procedures. Much of the digitized data is visual. To quote our LDV Capital Insights Report on Healthcare, “Visual assessment is critical, whether that is a doctor peering down your throat as you say ‘ahhh’ or an MRI of your brain.” Today, 90% of hospitals and healthcare systems have an AI and automation strategy in place, up from 53% in 2019. In 2021, the market for AI in healthcare was estimated to be worth around $11B worldwide, a figure forecast to reach $188B by 2030, a compound annual growth rate of 37%.
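Forecasts like this one are easy to sanity-check. The compound annual growth rate (CAGR) is the constant yearly rate that turns a starting value into an ending value over a number of years: CAGR = (end / start)^(1 / years) - 1. The minimal Python sketch below assumes the $11B (2021) and $188B (2030) figures span nine years; the function name `cagr` is our own illustration, not part of any library.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate of growth that
    turns start_value into end_value over the given number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# AI in healthcare: ~$11B in 2021, forecast $188B by 2030 (nine years).
healthcare_ai_rate = cagr(11, 188, 2030 - 2021)
print(f"Implied CAGR: {healthcare_ai_rate:.1%}")  # prints "Implied CAGR: 37.1%"
```

With the rounded market figures, the implied rate comes out at about 37%, consistent with the forecast. The same function can be applied to the other market projections in this article.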

Aggregating healthcare data has long been a bottleneck reliant on manual processes. Adopting secure cloud processes to anonymize health data helps in developing better algorithms for scalable precision medicine. A vendor-neutral archive (VNA) stores medical images and documents in a standard format so that other systems can access them. Amazon launched Amazon Comprehend Medical, a natural language processing (NLP) service that uses pre-trained machine learning to understand and extract health data, such as procedures, prescriptions or diagnoses, from medical text. Using pattern recognition to identify patients at risk of developing a condition is another area where AI supports data analysis and decision-making.

Next-generation imaging, computer vision and the miniaturization of devices have the potential to help diagnose disease before symptoms even appear. Advanced genomics (the sequencing of DNA) and phenomics (the analysis of observable traits, or phenotypes, that result from interactions between genes and their environment) provide earlier insight into the onset of disease. Computer vision and AI also play a critical role in helping radiologists analyze and interpret visual data. According to Frost & Sullivan, the global medical imaging and informatics market was estimated to reach $37.1B in 2021, growth of over 9% from the prior year. At LDV Capital, we invested in Ezra, which is enabling early cancer detection for everyone by using AI and MRI.

"Typically a full body MRI scan can take about two to three hours. Our machine learning approach enhances scans when they are acquired, automating the process to be 4x faster and providing a snapshot of up to 13 organs in just one hour. Applying AI to visual data will be the forefront of radiology and the huge endeavor towards screening a hundred million people a year," says Emi Gal, Founder & CEO of Ezra.

Radiologist vs AI detection of cancer in MRI scans ©Ezra

With next-generation sequencing, next-generation phenotyping and molecular imaging playing a major role in identifying biomarkers at the early onset of disease, there is a tremendous opportunity to improve patient outcomes while significantly decreasing healthcare costs.

The global market for surgical robotics and computer-assisted surgery is anticipated to grow from $6.1B in 2020 to $11.6B by 2025. Today's open surgeries will be enhanced by new techniques and technologies. For instance, intraoperative imaging with stereotactic systems will give surgeons higher-resolution, real-time visualizations during procedures. Image-guided radiotherapy will improve accuracy, further reducing the exposure of healthy tissue to radiation. By leveraging the consistency, accuracy and visualization abilities of robotic assistants, surgeons can improve their workflows and operations, reducing human error and uncertainty.

Carol Reilly, a serial entrepreneur and former employee of Intuitive Surgical, the company that pioneered robotic-assisted surgery and makes the da Vinci system, was featured in our Women Leading Visual Tech series. In her interview, she said she would trust surgeons who use the da Vinci robot regularly and have the relevant skills: “It's got benefits such as hand tremor reduction, and magnified views, it can scale up and down your motion, so it could be more accurate and precise in a way that supplements the human. The surgeon is still in full control.”

The STAR robot performs laparoscopic surgery on the soft tissue of a pig without human help. ©Johns Hopkins University

Last year, researchers at Johns Hopkins University developed the Smart Tissue Autonomous Robot (STAR), a self-guiding surgical robot that can perform challenging laparoscopic procedures in gastrointestinal surgery. Justin Opfermann, a Ph.D. student in the university’s Department of Mechanical Engineering, said: “This is the first time autonomous soft tissue surgery has been performed using a laparoscopic (keyhole) technique. The STAR produced significantly better results than humans performing the same procedure, which requires a high level of precision and repetitive movement. This is a significant step toward fully automated surgery on humans.”

Investments in the healthcare & MedTech space make up 30-40% of our portfolio, including companies that are using automation to revolutionize health screening, fertility and mental health. Norbert Health has built the world’s first ambient health scanner, which measures the vital signs of anyone within a 6-foot range without requiring any effort from the person being scanned. Placing a Norbert device in a patient’s recovery room, at a clinic’s entrance or in a patient’s home automates vital-sign measurement for monitoring. This reduces risk to patients and eases the strain on hospital staff and resources.

Norbert’s ambient vital sign scanner ©Norbert Health

"Automated and contactless vital signs and health scanning is a key enabler to the rapid transition of healthcare into the home, from inpatient to outpatient or remote care. We combine multispectral sensors, from mmWave to visible light, to accurately measure complex metrics on the human body. Our device also makes it possible to move traditional healthcare to preventive care,” says Alexandre Winter, co-founder & CEO at Norbert Health.

To date, tracking mental health has been an art, not a science. There are thousands of wellness and meditation apps available, but none of them can track your mental health in real time. Earkick offers a revolutionary tool for measuring workplace mental health in real time. It uses machine learning models to decode a user’s emotional state and predict outcomes such as anxiety levels, mood disorders, panic attacks and other irregularities in behavior. Earkick then recommends in-the-moment mental health exercises based on cognitive behavioral therapy, informed by its emotion recognition and mood and anxiety prediction models.

"Earkick's AI automatically analyzes your current mental readiness with a multi-modal approach using various physiological signs. Being able to understand your mental health trends in an understandable way is key to empowering individuals towards the best mental readiness," says Dr. Herbert Bay, Co-founder at Earkick.

As inefficient, outdated processes are digitized, much of the resulting data will be visual. Visual technologies will shape the future of personalized healthcare and precision medicine as imaging, computer vision and AI are applied across a patient’s journey: prevention, diagnosis, treatment, postoperative recovery and continuing care.

Manufacturing & Construction

The manufacturing sector is facing a persistent shortage of both skilled and unskilled labor. In the US alone, 2.1 million jobs could remain unfilled by 2030, costing the economy up to $1T, according to a recent study by Deloitte and the Manufacturing Institute. Meanwhile, manufacturers face growing and changing customer demand. Driven by the rapidly expanding e-commerce sector, there is a trend toward greater product customization. Traditional industrial automation has required expensive experts to program, offered limited flexibility and taken up a large footprint. The future of manufacturing will integrate extremely easy-to-use, safe, flexible and affordable automation, supported by AI and standardized software and hardware interfaces.

As stated in our LDV Capital Insights Report on Manufacturing & Logistics, “[Over] the next five years, factories and processes will leverage visual sensors to reliably capture operating data and computer vision to unlock the insights within the data. Accurate, real-time visual data that can be analyzed to produce actionable insights will be a game changer for manufacturers.” Visual technologies will enable manufacturers to make innovative product breakthroughs, cut costs, increase productivity and improve quality by eliminating errors and reducing variability.

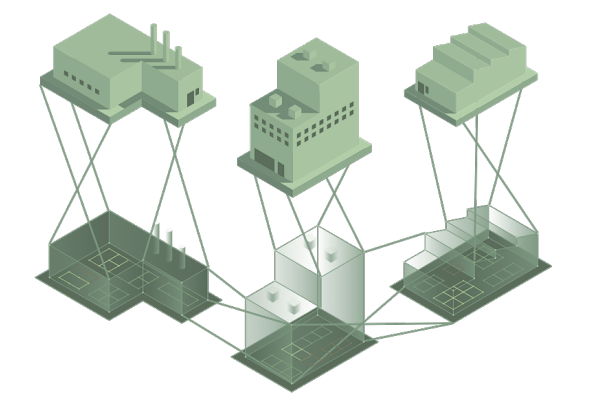

Digital twin illustration ©2019 LDV Capital Insights report

A digital twin is an interactive digital replica of a physical entity or system that is continually updated in response to real-time changes. Visual technology is expanding the notion of the digital twin by enabling the virtual re-creation of large parts of the manufacturing process. At the same time, browser-based, user-friendly 3D modeling software is making product design more accessible. Together, these developments will bring deep, actionable insight into all layers of the manufacturing environment.
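To make the idea concrete, the minimal Python sketch below models a digital twin as a virtual state object that is updated whenever its physical counterpart reports a new sensor reading, and that can be queried with what-if scenarios without affecting the real machine. The class name `MachineTwin`, its methods, the machine ID and the sensor fields are all hypothetical; production digital twins typically also carry 3D geometry, physics models and connections to plant systems, which are out of scope here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class MachineTwin:
    """Hypothetical digital twin of a single machine (illustrative only)."""

    machine_id: str
    state: dict = field(default_factory=dict)    # latest known value per sensor
    history: list = field(default_factory=list)  # every reading, for later analysis
    last_updated: Optional[datetime] = None

    def ingest(self, reading: dict) -> None:
        """Apply a real-time reading from the physical machine to the twin."""
        self.state.update(reading)
        self.history.append(dict(reading))
        self.last_updated = datetime.now(timezone.utc)

    def simulate(self, **overrides) -> dict:
        """Return a what-if copy of the current state; the twin itself is unchanged."""
        scenario = dict(self.state)
        scenario.update(overrides)
        return scenario


twin = MachineTwin("press-07")
twin.ingest({"temperature_c": 71.5, "spindle_rpm": 1200})
twin.ingest({"temperature_c": 73.0})
print(twin.state)                       # {'temperature_c': 73.0, 'spindle_rpm': 1200}
print(twin.simulate(spindle_rpm=1500))  # {'temperature_c': 73.0, 'spindle_rpm': 1500}
```

Keeping `simulate` side-effect free is the key design choice: engineers can explore changes on the replica while the twin continues to mirror the real machine.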

Highly accurate microscopes and advanced light-based technologies open the door to new artificially structured materials such as metamaterials. Continued progress in spectroscopy and nanophotonics is pushing us toward super-resolution lenses and microscopes that can provide insight into a wide variety of biological and chemical processes and structures. Light and laser direct deposition, photopolymer waveguides and light-based scanning for ultra-accurate metrology are all necessary for the consistent additive manufacturing of prototypes that leverage advanced material properties.

Siemens, a German multinational conglomerate and the largest industrial manufacturing company in Europe, has capitalized on the adoption of industrial automation and data analytics. Its AI-based analytics enable predictive maintenance, which reduces downtime, and help ensure high quality by detecting anomalies and errors early, while production is still under way. Other businesses now rely on Siemens’ technologies to reduce machinery costs, improve consistency through automation and get instant data for quickly adjusting workflows.
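How Siemens’ analytics work is not described here, so the following is only a generic illustration of one simple technique behind early anomaly detection: compare each new sensor reading with a rolling baseline of recent readings and flag it when its z-score exceeds a threshold. This minimal Python sketch is not Siemens’ method; the function name, window size, threshold and vibration values are all assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Return (index, value) pairs that deviate sharply from the rolling baseline.

    Each reading is compared with the mean and standard deviation of the
    previous `window` readings; a z-score above `threshold` is flagged.
    """
    recent = deque(maxlen=window)  # sliding window of the latest readings
    anomalies = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            if sigma == 0:
                # Perfectly flat baseline: any change at all is unusual.
                is_anomaly = value != mu
            else:
                is_anomaly = abs(value - mu) / sigma > threshold
            if is_anomaly:
                anomalies.append((i, value))
        recent.append(value)
    return anomalies


# Hypothetical vibration amplitudes from a machine spindle, one per time step.
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.51, 0.95, 0.50, 0.52]
print(detect_anomalies(vibration))  # [(6, 0.95)]
```

A rolling window lets the baseline follow slow drifts in normal operation; the trade-off is that a spike temporarily widens the baseline for the readings that follow it. Real predictive-maintenance systems usually combine many signals with learned models.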

An ultra-compact, affordable sensor module ©Voyant Photonics

Our LDV portfolio company Voyant Photonics leverages commercially available and scalable semiconductor fabrication processes that combine thousands of optical and electrical components onto a single chip. This enables the mass production of a LiDAR system with on-chip beam steering in a field-deployable package. The team’s goal is to create ubiquitous LiDAR for machine perception across all applications. Smart infrastructure is a key use case: the sensors are well suited to low-duty-cycle metrology for Internet of Things (IoT) applications, enabling reliable, accurate and persistent monitoring of bridges and power lines, as well as detection of defects across industrial facilities.

“Accurate 3D vision is essential for machines to understand and interact with the physical world. Mass-producing a complete LiDAR system on a semiconductor chip radically reduces the cost while increasing the reliability of machine perception, unlocking massive productivity gains in robotics, industrial automation & mobility. Think of the changes that came from vacuum tube computers to the computing devices we use today,” says Peter Stern, CEO at Voyant Photonics.

The construction industry has experienced decades of stagnant productivity. Over the past two decades, the sector’s productivity has grown by only about 1% a year, lagging behind the world economy. For the construction industry to evolve, automation is needed in many forms, from digital design and process analysis to the automated creation of construction documentation and on-site construction robotics. Automation makes construction projects more efficient, shortens laborious work, improves construction safety, and promotes quality and consistency.

Gryps, another company in the LDV portfolio, connects construction data silos to deliver smart analytics that help facility owners increase their ROI. Its software platform leverages robotic process automation, computer vision and machine learning, eliminating the need for manual data entry. Major customers include the Jacob K. Javits Convention Center, Mount Sinai Hospital and the Hospital for Special Surgery, which manage their capital projects more effectively and with less manual work because Gryps automatically aggregates their data and provides advanced analytics.

“Through robotic process automation and AI, scattered data can be better collected and analyzed to deliver smarter insight to the project managers that would otherwise have to go through consultants and expensive custom software," says Dareen Salama, co-founder & CEO at Gryps.

Agriculture & Sustainability

Different sectors in food and agriculture that are powered by visual technologies ©2020 LDV Capital Insights Report

The global market size of smart agriculture is expected to grow from approximately $12.4B in 2020 to $34.1B by 2026. With the help of automation, the agriculture sector is seeing increased adoption of practices based on technologies such as AI, IoT, cloud computing, and robotics. As the population increases, more waste is generated, and climate change affects land use, innovation is imperative to ensure a cleaner, safer and healthier world. Visual technologies are powering key developments for agriculture and sustainability and will drive innovation across these sectors.

Developing a higher-yield plant breed currently takes 5-10 years and requires correlating genetic data with the physical traits a plant displays as it grows; measuring those traits is known as phenotyping. Phenotyping is predominantly a manual process performed by skilled operators. Computer vision and machine learning now enable faster and more reliable indoor phenotyping through a wide range of imaging sensors, including multi-angle, 2D, 3D, RGB, fluorescence, near-infrared, thermal infrared and hyperspectral. Over the next 3-5 years, automated phenotyping will significantly help breeders correlate genes with physical traits. This will shorten the breeding cycle by two or more years, reduce the land used for breeding and enable a greater breadth of experiments.
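As one example of how near-infrared imaging becomes a measurable trait, the minimal Python sketch below computes the Normalized Difference Vegetation Index (NDVI), a widely used vegetation index defined per pixel as (NIR - Red) / (NIR + Red). Healthy leaves reflect strongly in the near-infrared and absorb red light, so higher values generally indicate denser, healthier vegetation. NDVI is our illustrative choice, not the method of any company mentioned in this article, and the reflectance values are made up; a real phenotyping pipeline would also calibrate the sensors and segment plants from the background.

```python
def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red) for two co-registered bands.

    `nir` and `red` are same-sized 2D lists of reflectance values (0 to 1).
    Pixels where both bands are zero are assigned 0.0 to avoid dividing by zero.
    """
    index = []
    for nir_row, red_row in zip(nir, red):
        row = []
        for n, r in zip(nir_row, red_row):
            total = n + r
            row.append((n - r) / total if total else 0.0)
        index.append(row)
    return index


def mean_index(index):
    """Average the index over all pixels to get one trait value per plant."""
    values = [v for row in index for v in row]
    return sum(values) / len(values)


# Hypothetical 2x2-pixel reflectance patches for two plants.
plant_a = ndvi([[0.60, 0.62], [0.58, 0.61]], [[0.08, 0.07], [0.09, 0.08]])
plant_b = ndvi([[0.40, 0.42], [0.38, 0.41]], [[0.20, 0.18], [0.22, 0.19]])
print(f"Plant A mean NDVI: {mean_index(plant_a):.2f}")
print(f"Plant B mean NDVI: {mean_index(plant_b):.2f}")
```

Averaging the index over each plant yields a single number that can be tracked over time and compared across breeding lines, which is the kind of trait measurement automated phenotyping scales up.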

Gardin’s AI-powered device provides crop optimization for indoor farms ©Gardin

Visual sensing can analyze crop needs with precision. Machine learning algorithms ingest drone, plane and satellite images of increasing resolution and spectral range, further enabling remote agronomy. Equipment-mounted sensors, meanwhile, measure soil parameters and plant characteristics in real time. LDV portfolio company Gardin is the world’s first remote plant insights platform. With Gardin’s software, commercial farms can detect early signs of stress using its Plant Health Index, detect tray variability using its Plant Uniformity Index, and adjust lighting based on plant light-use efficiency.

“As the data pool continues to increase, Gardin’s aim is to forever remove the inefficiencies of the traditional crop growing process, and provide automated systems that when managed sustainably, contribute positively to the health of our planet,” says Sumanta Talukdar, Founder & CEO at Gardin.

Lastly, advanced imaging techniques drive quality assurance (QA). Nearly a quarter of food product recalls are due to operational errors such as product contamination, foreign bodies, spoilage and unauthorized ingredients. These errors can be caught at the food processing and packaging stages of production. Machine vision will enable a more thorough QA process by making sorting and processing in packhouses and at food processors more efficient. Scanning systems combined with machine vision QA will improve the detection of internal and external bruising and decay, as well as the measurement of dry matter content. These technologies will augment legacy solutions such as 2D x-rays, which can only detect large contaminants such as bone fragments and metals.

Conclusion

Implementing cameras, visual sensors & computer vision represents an opportunity for new businesses to grow and for established companies to invest and improve. Visual technology innovations will remain the crux of automation for the future of industry throughout the next decade and beyond. As demand for workflow automation grows rapidly, investment in computer vision, machine learning and AI will be the key enabler of how healthcare & precision medicine, manufacturing & construction, agriculture & sustainability, and many more industries innovate and evolve.

This post was written in collaboration with Ash Cleary, an analyst at LDV Capital.