Supercharging Creativity With OpenAI’s GPT-3, Codex, DALL-E and DALL-E 2

Peter Welinder speaking at our LDV Vision Summit (May 19, 2015) © Robert Wright

Peter Welinder is the VP of Product and Partnerships at OpenAI. OpenAI is a research and development company focused on developing safe artificial intelligence that benefits all of humanity. It was founded in late 2015 by Elon Musk, Sam Altman, and others, who collectively pledged US$1 billion.

Peter co-founded Anchovi Labs together with Serge Belongie, one of our long-time LDV experts-in-residence. Anchovi Labs developed a platform to organize, browse and share personal photos. The company was acquired by Dropbox in 2012, and Peter joined the Dropbox team as an engineer and the head of the machine learning team. In 2015, he gave a keynote speech at our 2nd Annual LDV Vision Summit, “Building A Cloud-Enabled Home for All Your Memories” where he shared stories from building Carousel, Dropbox’s dedicated photos experience.

At LDV Capital, we invest in people building businesses powered by visual technologies. We thrive on collaborating with deep tech teams leveraging computer vision, machine learning, and artificial intelligence to analyze visual data. We are the only venture capital firm with this thesis.

In 2021, our team was researching the future of content creation and Peter Welinder was one of the experts we spoke to. Later that year, we released our report, “Content & the Metaverse are Powered by Visual Tech” and hosted a virtual event to present our findings, where Peter gave a keynote on supercharging creativity with AI. Watch his presentation or check out the shortened transcript below.

“Every human is inherently creative. We can create amazing things. If you look at children, they're incredibly curious and they create amazing stories, and they have so much imagination! As we grow up, we feel like we lack the skills to be good. We don't have the time to learn to play an instrument or to draw well, or we feel less confident in our writing and so on. This ‘blocker’ prevents us from exploring different mediums for our creativity.

The models that we are developing at OpenAI can aid every human in their creativity. I will show you examples of three different models that touch on three different modalities – GPT-3, Codex & DALL-E. Some of these models are available through our API.

GPT-3

GPT-3 stands for Generative Pre-trained Transformer Version 3. Think about GPT as a giant language model. It's a big neural network that has 175 billion parameters. It's been trained on big swaths of text found on the internet. You can give some text to GPT-3 and then you get some text out. Let me show you a few examples of what that looks like and how it can then be used to aid human creativity.

You can ask GPT-3 factual questions. For example, you can ask what the human life expectancy is in the United States and get an answer. What you see in boldface is the text you give to GPT-3. The text that comes out at the bottom is what GPT-3 gives back: its response.

With GPT-3, we can translate between languages. In this example, we ask the model to translate a sentence from English to French.

A text about Jupiter rephrased by GPT-3 for a second-grader.

If you're an author, you'll probably hit writer's block at some point or you might want to get ideas on how to start a piece of text. You can ask GPT-3 to do that for you. Let’s request a paragraph for a children's story. You can try this with different genres – it’s a good way to explore concepts and ideas!
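As an illustration that is not part of the talk, the "text in, text out" pattern behind the four examples above can be sketched as a set of prompt templates. This is a minimal sketch under stated assumptions: the template wording, the task names, the helper functions `build_prompt` and `build_request`, and the request fields are all hypothetical and only show how one model can be steered to different tasks by changing the text it receives. No network call is made.

```python
import json

# Hypothetical prompt templates, one per use case described in the talk.
# The exact wording is an assumption; in practice it is tuned by trial and error.
TEMPLATES = {
    "question": "Q: {text}\nA:",
    "translate": "Translate this into French:\n\n{text}\n\nFrench:",
    "simplify": "Rephrase this for a second-grade student:\n\n{text}\n\nRephrased:",
    "story": "Write the opening paragraph of a children's story about {text}.\n\n",
}


def build_prompt(task: str, text: str) -> str:
    """Return the text that would be handed to the model for the given task."""
    if task not in TEMPLATES:
        raise ValueError(f"unknown task: {task!r}")
    return TEMPLATES[task].format(text=text)


def build_request(prompt: str, max_tokens: int = 64, temperature: float = 0.7) -> str:
    """Serialize an illustrative request body (field names are assumptions)."""
    return json.dumps(
        {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
    )


if __name__ == "__main__":
    prompt = build_prompt(
        "question", "What is the human life expectancy in the United States?"
    )
    print(prompt)
    print(build_request(prompt, max_tokens=32))
```

The model itself stays the same across all four tasks; only the prompt changes, which is why trying a different genre or task is as cheap as editing a line of text.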

Codex

This neural network translates natural language to code.

Instead of training this model on just text, we also train it on code. Code is a powerful, creative medium. There are many things you can create with code. You can create tools to create. You can supercharge creativity. If you enable people who can't code, or help people who already code, to explore more ways of coding, you can help them be much more creative.

As before, we put in some text, but in this case you get code out instead of text. In this example, we asked Codex to create a snowstorm on a black background.

On the right, you see a bunch of JavaScript code that's been generated by Codex. It creates the code to generate this snowstorm on the left. The model is able to take that fairly vague concept expressed in text and create a bunch of fairly complicated code to make that happen. Even if you might not have programming skills, the model can enable you to create things in code that you wouldn't have been able to create before.
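To give a sense of what "a snowstorm" involves as a program, here is a small hand-written sketch that is not part of the talk. It is not the JavaScript that Codex generated, which is not reproduced in this transcript. It is a text-mode Python approximation under stated assumptions: the names `Flake`, `make_flakes`, `step` and `render` are hypothetical, and blank terminal cells stand in for the black background.

```python
import random
from dataclasses import dataclass


@dataclass
class Flake:
    x: float      # horizontal position, in columns
    y: float      # vertical position, in rows
    speed: float  # rows fallen per animation step


def make_flakes(count: int, width: int, height: int, rng: random.Random) -> list:
    """Scatter `count` flakes at random positions with random fall speeds."""
    return [
        Flake(rng.uniform(0, width), rng.uniform(0, height), rng.uniform(0.5, 1.5))
        for _ in range(count)
    ]


def step(flakes: list, width: int, height: int, rng: random.Random) -> None:
    """Advance every flake one step: fall, drift sideways, respawn at the top."""
    for flake in flakes:
        flake.y += flake.speed
        flake.x = (flake.x + rng.uniform(-0.5, 0.5)) % width
        if flake.y >= height:
            flake.y = 0.0
            flake.x = rng.uniform(0, width)


def render(flakes: list, width: int, height: int) -> str:
    """Draw one frame: '*' for a flake, a space for the empty background."""
    grid = [[" "] * width for _ in range(height)]
    for flake in flakes:
        grid[int(flake.y) % height][int(flake.x) % width] = "*"
    return "\n".join("".join(row) for row in grid)


if __name__ == "__main__":
    rng = random.Random(0)
    flakes = make_flakes(30, 60, 15, rng)
    for _ in range(10):
        step(flakes, 60, 15, rng)
    print(render(flakes, 60, 15))
```

Even this toy version needs state for each flake, an update rule and a drawing routine, which is the kind of detail a one-line text instruction leaves for the model to fill in.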

DALL-E

A neural network called DALL-E creates images from text captions for a wide range of concepts expressible in natural language. It's able to draw things in different concepts and styles.

Here is an example of a text prompt where you ask the model to create a storefront that has the word OpenAI on it. Sometimes it misspells the name but most of the time you get something that's good.

You can ask it, for example, to show a cross-section view of a walnut. As you can imagine, this bot has probably seen a lot of open walnuts.

You can also ask the model to combine concepts and create things that it has never seen in its training data. Here's an example of asking the model to create an armchair in the shape of an avocado. I would probably be down for buying at least one of these.

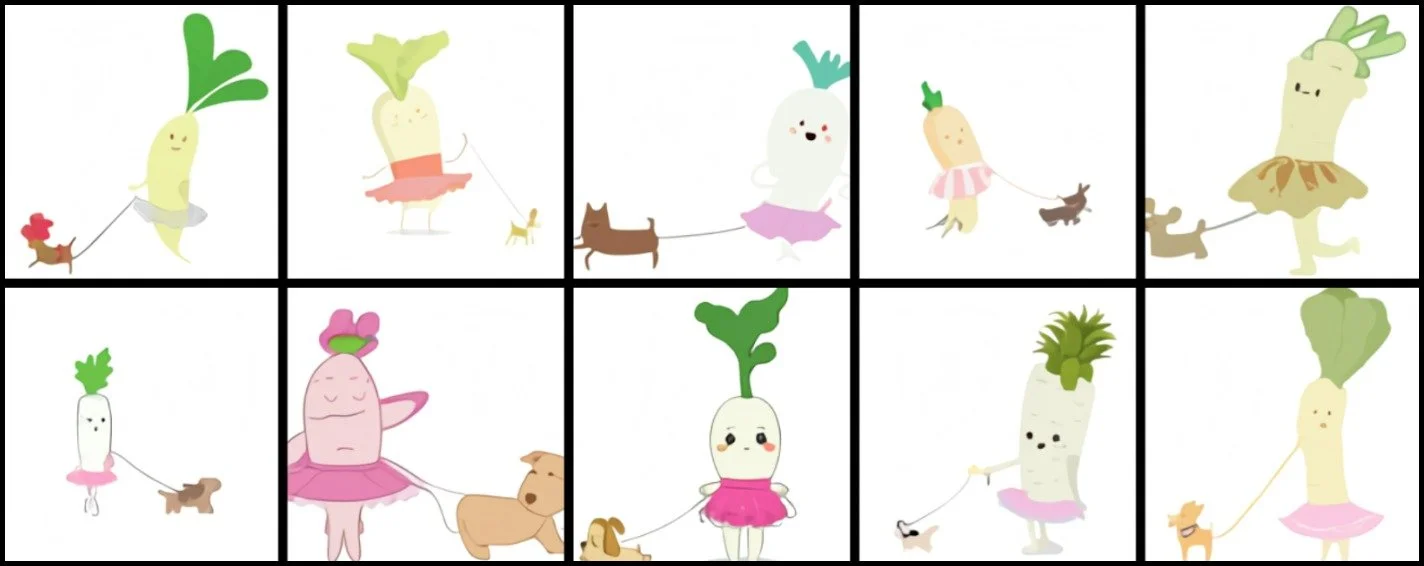

The model is skilled at creating such images from the concepts that you as a human can come up with. Here's an example that is more of an illustration: a baby daikon radish in a tutu walking a dog.

AI has the ability to supercharge everyone's creativity. It’s just the beginning and we'll see models like Codex, GPT-3 or DALL-E get even better. I encourage you to think about how you can use these sorts of models to supercharge your creativity in your organization.”

DALL-E 2

As expected, breakthroughs in visual tech tools are fueling the next evolution of content creation. Earlier this year, OpenAI announced DALL-E 2, claiming that it can produce photorealistic images from textual descriptions, along with an editor that allows simple modifications to the output. It can combine concepts, attributes, and styles. The model can add and remove elements while taking shadows, reflections, and textures into account. DALL-E 2 can also take an image and create different variations of it inspired by the original.

DALL-E 2 is slowly being rolled out to the public via a waitlist. We at LDV had a chance to test DALL-E 2 and are thrilled to share a few examples below.

The LDV team asked DALL-E 2 to generate an image of a grizzly bear riding a mountain bike

The LDV team asked DALL-E 2 to generate an oil painting of a platypus playing volleyball

The LDV team asked DALL-E 2 to generate an impressionist painting of a person riding a mountain bike

The LDV team asked DALL-E 2 to generate a photo of a zebra in a straw hat in space

Previously, we touched on the topic of AI generating visual content and specifically visual metaphors in our Women Leading Visual Tech interview series. We spoke to Dr. Lydia Chilton, an Assistant Professor of Computer Science at Columbia University. She works on constructing visual metaphors for creative ads and tools that help to write humorous stories and news satire. Here’s what she says: “Creative visuals with motion drive even more clicks. In a world like TikTok and Instagram, if you don't have a short video or an image, no one is seeing your stuff. Everyone wants visual content but you can't use stock photography anymore. Everyone's seen it. It has to be special in some way to grab your attention.”