Can Meta-Learning Unlock the Magic of AI?

/Women Leading Visual Tech: Interview with Dr. Jane Wang, DeepMind’s Senior Research Scientist

LDV Capital invests in people building businesses powered by visual technologies. We thrive on collaborating with deep tech teams leveraging computer vision, machine learning, and artificial intelligence to analyze visual data. We are the only venture capital firm with this thesis.

A year ago, we started our monthly Women Leading Visual Tech series to showcase the leading women whose work in visual tech is reshaping business and society.

Our first guest was Dr. Timnit Gebru, former technical co-lead of the ethical artificial intelligence team at Google. To celebrate the anniversary, we invited Dr. Jane Wang, a senior research scientist at DeepMind – an artificial intelligence research laboratory founded in 2010 and acquired by Google in 2014 for $500M.

Our very first WLVT community event, “Can Meta-Learning Unlock the Magic of AI?”, was hosted on March 18th. We received an overwhelming interest in this event but had to hand-pick only 50 participants with the idea to discuss the topic in small groups led by our brilliant discussion leaders. You’ve met some of them in our series before – Esther Dyson, Dr. Gaile Gordon, Dr. Sujatha Ramanujan, Dr. Mackenzie Mathis! They were joined by Tate Ryan-Mosley, Sowmiya Chocka Narayanan, Dr. Fiona Hua, Dareen Salama, Rosanna Myers and our colleague Meryl Breidbart.

Jane was interviewed by Abigail Hunter-Syed, a Former Partner at LDV Capital. Following is an edited version of their discussion and the unedited video can be found below. (Note: After five years with LDV Capital, Abby decided to leave LDV to take a corporate role with fewer responsibilities that will allow her to have more time to focus on her young kids during these crazy times.)

Dr. Jane Wang is a senior research scientist at DeepMind.

Jane’s background is in computational and cognitive neuroscience, complex systems, and physics. She is interested in applying neuroscience principles to inspire new algorithms for artificial intelligence and machine learning.

She received a Ph.D. in Applied Physics from the University of Michigan in 2010. She is one of the UK’s rising stars in cutting-edge artificial intelligence research and one of the organizers of the Women in Machine Learning community.

Jane’s recent work focuses on reinforcement learning and meta-learning with fantastic successes, such as the recent release of Alchemy as well as a variety of recent papers in leading publications. In 2020 she wrote a post on her blog (which has amazing short stories) where she imagines that the most powerful AI models of the future will be indistinguishable from magic.

Abby: It's Women's History Month, and we're pleased you agreed to be interviewed at our very first Women Leading Visual Tech community event. I want to start the conversation by discussing your role models.

Jane: That's an important question because growing up in science or just in general, you don't have a lot of women role models in science and technology. You're reading through your science book – it's mostly men and you don't see yourself in their place as this person who will go on to do scientific discoveries...

A lot of my role models were people in my family. My mom, for instance, is a retired mechanical engineer. Her career choice made me realize that it wasn't something off-limits to me.

There've been so many female professors that have been amazing at their jobs but I might turn this around and say that I'm at the point where I'm inspired by young women that are starting out. They're fearless, they have so much energy, passion and curiosity, and they're already kicking butt in the stuff that they're doing. Just knowing that they're out there inspires me to keep going.

Abby: When did you first know that you wanted to be a scientist? Was it watching your mom as a mechanical engineer and being like, "Oh, that's awesome. I want to do that?"

Jane: Both my parents were engineers and I thought that it was a boring career at first. I wanted to be an astronomer because I loved looking at the stars and learning about space, and I was into science fiction. That's what first drew me to science. It was so powerful to be able to understand how things work. All the technology that can come out of being able to understand something fascinates me.

For instance, the fact that COVID-19 vaccines were developed and deployed in such a short amount of time is inspiring. I wanted to be a part of the science world and to understand how it was possible.

Abby: You spent your graduate and postgraduate work looking at human cognition. Specifically, if I understand right, memory and memory-based decision-making, and you used different imaging techniques, like fMRI (functional magnetic resonance imaging) and others. You even developed some experimental approaches to neural processes in cell cultures. How important was starting out seeing how the brain works for your research? And was that driven by this idea, "I want to understand how it works? I want to be a part of this?"

Jane: It was. That's a great question because it gets at the general framework of how you do science. For me, the first step of trying to figure out a problem, to analyze a data set, is to always get my eyes on the data because our brains are incredibly good at spotting patterns, and be able to allow us to ask interesting questions and, of course, to be scientifically accurate. Once you see those patterns and formulate a hypothesis, you should go out and collect more data. The first formation of those hypotheses has to come from you looking at the data and being curious and exploring what's there.

Abby: The way we think about it at LDV is that the vast majority of data that our brains collect and analyze is visual and so for AI to succeed, it's got to be able to analyze visual data as well. Let’s talk about how you started getting interested in looking at human cognition to develop a more effective framework for computer cognition. I've heard you say that it's two sides of a coin: neuroscience is trying to dissect the way that we think and how we analyze that data and AI is trying to engineer systems to analyze that data. How did you get interested in looking at both sides of the coin?

Jane: It was the natural progression of where my research interests led me. I went into neuroscience because I was fascinated by the way my brain works, how it problem-solves. At some point, you get to this gap where it's like, “we don't understand where these thoughts come from, how they arise from the substrate of what they're made of, these neurons and biological parts”.

You can specify in AI or computer programs how to do something logically but we have no idea how to bring it closer to the ways that human thought is formed.

I was always drawn to that gap. I was interested in brain-computer interfacing because I was obsessed with the idea of being able to transfer thoughts from my brain to the computer.

What is the program of human thought? I like to think of it as the software of the mind as opposed to the hardware, which is the brain and the substrates.

Abby: It's funny that you talk about brain-computer interface. We had Thomas Reardon from CTRL-labs at our Vision Summit a couple of years ago and it was remarkable to watch what they could do with the hardware. It's a great segue into talking about meta-learning. I'd love to talk about it at a high-level and understand how we can be applying this. As a foundation, you've talked about meta-learning as learning to learn. You stated that was the best definition in your recent paper in Behavioral Sciences.

Jane: For me, meta-learning or learning to learn is the same thing. It's the idea that you can learn or improve upon a learning process itself. To give an example, let's say you're learning a new programming language. The first time you do that, it's going to take you a lot longer than your second or third programming language because, at that point, you understand case structures, for loops, strings and data structures. There's all this stuff that you learned the first time about the learning process itself and it's a lot faster for you now to pick up that second, third, fourth language. This is true for a lot of things that you're learning.

Abby: One of the examples that I've heard you talk about is how we learn how to understand what is most important for the task at hand. Sometimes color is important but sometimes it's all about the shape. I watch my kids try to put multicolor pieces into a shape shorting cube toy and they quickly seem to prioritize shape over the color of the pieces. So, to your point, our brains learn how to rank features based on importance for the task at hand. You're trying to teach machine learning models how to do that as well. Do you think of it as significantly different from deep learning?

Jane: I think that meta-learning is orthogonal to deep learning. You can do meta-learning anytime. All you need is a learning process with parameters that themselves can be learned, and it doesn't have to be via neural networks or deep learning.

I consider evolution to be a form of meta-learning as well because your genes specify your neurons that themselves learn.

Anytime you have nested scales of learning for some parameter, you can adjust in your learning process, meaning can have meta-learning.

We were honored to have 75 attendees and this image shows a subset of our fantastic attendees.

Abby: You talk a lot about the different scales of learning. I'm assuming that evolution is the utmost scale of learning that's intergenerational. Could you break it down for us a little bit? What do you think about those different levels?

Jane: I think that learning is happening at multiple timescales all the time. Anytime you form a memory, you can do learning and that memory can then impact the way that you're learning something in the future.

We're constantly influencing our learning process as a result of things that we have learned in the past.

I almost feel like it's not that there are these binary or discrete scales of meta-learning.

In my work, I've identified common scales of meta-learning. It’s evolution, for instance. And then the way that your brain develops throughout childhood is the way that your neurons, the synapses connections are pruned. And then the way that the synapses themselves can strengthen or weaken as a result of experience. Those all happen at drastically different scales, and they all depend on those slower skills of learning as well. But that's not to say that there aren't other scales in there somewhere that are maybe happening at the same time or there are in parallel.

Abby: The immense learning that I take away from that is that our brains are doing amazing functions all at the same time that help us do everything from pick up our coffee cup to understand different trends and make sense of all the data as you were talking about earlier. One of the things that I've read about, and that I've heard you talk about too, is how bias starts to play a role in this. We learn certain things, and we start to have a certain bias, whether that's our preference of color or something along these lines. Is this a benefit to the way that humans think because it enables us to learn better? Or do you think that this is a downfall?

Jane: I think it's both. Inductive bias pops up because it is beneficial when you can make assumptions about the kinds of problems that you're going to be presented with or the environment that you're going to have to make decisions in. Having an inductive bias, essentially, it's an assumption that you can make that your environment is a certain way, and that helps you to learn and adapt much more quickly than if you didn't have those biases.

This is kind of like a two-sided coin. It’s similar to stereotypes, for instance. We usually consider stereotypes to be bad, but a lot of times, they have some nugget of truth in them, and they can help us to make fast snap-decisions. If we had to completely take all the information in an unbiased way and make a decision every single time, that would be difficult for us because we have limited amounts of time and information to make these decisions.

A question from the audience | Esther Dyson, Executive Founder of Wellville: One of the best and obviously, most exciting/interesting examples of evolution/learning right now is the COVID variants and how the fitness function that we represent is fostering rapid growth. I'd love to hear you talk about that and just have some insights.

Jane: That's a fascinating take. I hadn't thought about it in terms of meta-learning before. It speaks to the power of being dynamically adaptive to an environment that also adapts to you.

Interestingly, the spread of the COVID variants depended on not only the genetic variation of COVID itself but also on people's actions. In certain communities, people are practicing social distancing less often, and that's creating a positive pressure for these fast-spreading variants. The virus is learning to take advantage of our behaviors and the way that we're acting around each other and we are simultaneously adapting to COVID and what our government is telling us.

Esther: We're adapting as individuals and changing our behavior, but when we talk about COVID variants – the worst ones are surviving and the rest are dying off. It's an interesting divergence in the models. One is learning and the other is evolving.

Jane: You can consider evolution to be a form of learning as well.

Abby: What is our learning? What have we learned about the way that people respond to COVID and the way that it mutates, and how can we then apply that to our understanding of AI? How quickly did people adapt their behaviors or didn't adapt their behaviors? Is there an insight that we could take and apply to how we could help to engineer systems or computer cognition that we hadn't before?

Jane: Trying to frame this whole pandemic and our response to it in meta-learning terms, I would think that the meta-learning we're doing is how we, as a population, can respond to such pandemics.

I think we've taken a lot of teachings from what happened in 1918, the Spanish flu. We look to history to see what did work, what didn't work. We've adapted our strategies because of that. For instance, we knew that the Spanish flu caused cytokine storms and we had to be wary of our body's responses to COVID. I’m not an epidemiologist but I believe we took many lessons from that. It’s part of the reason we had such a great rollout of the vaccine developments. I think nobody expected it to be this fast.

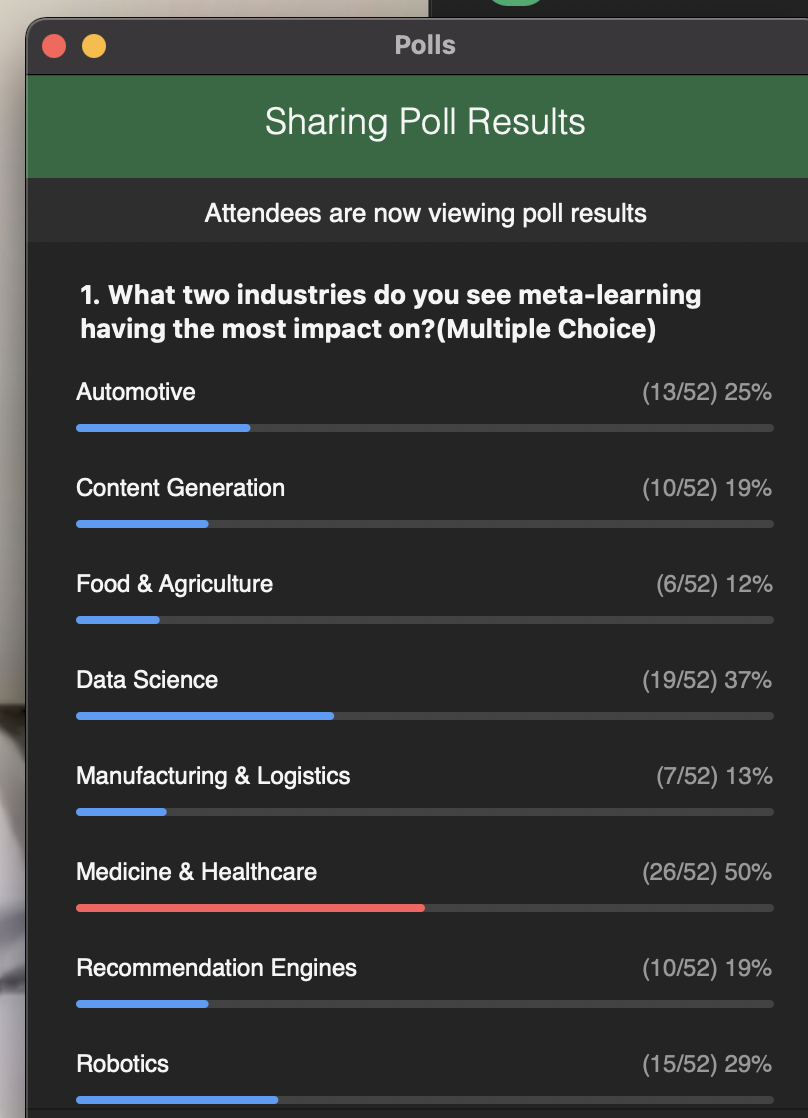

Abby: Talking about how those learnings have impacted the way that vaccine rollout has gone… Which industries or sectors in your opinion have the most opportunity to leverage meta-learning now and in the future?

Jane: I think all of them.

Meta-learning is not a magic bullet, it’s just an idea that problems you want to apply your algorithm to have some structure and you can take advantage of that structure by learning it.

Attendees of our very first WLVT community event shared their opinion as well

Abby: Can you give me an example of a problem that you think is primed for a meta-learning solution?

Jane: I wouldn't necessarily say primed. Most technology companies today are looking at problems that could probably use some aspects of meta-learning, like self-driving cars, for instance. I'm not a self-driving car expert, but it seems like a lot of companies have handcrafted visual recognition systems or object segmentation systems and are trying to create hand-coded rules. Instead, you could have a self-supervised system that's teaching and bootstrapping itself to learn to better recognize things in the future as you give it more and more data. I think Tesla is doing something like this. You can always leverage more data.

A question from the audience | Dr. Carol Dersarkissian, a board-certified emergency physician: Can you think of a specific example where meta-learning could be incorporated into health care or treatment or management of patients?

Jane: The tricky thing about healthcare is that you want to be extremely careful. You can’t just collect the data, it’s not free. You don't want to be accidentally giving somebody the wrong medication and risking somebody's safety for that. You would need to think about ways of collecting data either in simulation or being able to leverage sparse data in a semi-supervised way.

With meta-learning, it takes longer to learn a learning process itself than to just learn a problem. It can be computationally expensive.

A question from the audience | Lilit Dallakyan, Research Manager & Associate at ML Labs / SmartGateVC: What critical issues can meta-learning help us solve in brain-computer interfacing? Do you think that hardware is more limiting, or do you think we need better software?

Jane: These are great questions that I hadn't thought about in terms of meta-learning before. With BCI, you need to train an algorithm almost for each person because you need to be able to translate between brain activity and whatever representation that you're trying to get out of it. If somebody is trying to move a cursor with their mind, then you need to have a lot of training iterations with this person to be able to properly label these different representations. They usually tend to be individual. If only there was a way of capturing structure across people... If you tend to have this representation for moving a cursor, then you will also have this representation for moving a coffee cup or doing some other movement.

Abby: It seems like it’s a good idea for startups and established companies in the BCI space: to become more efficient at scale, they can start to develop their algorithms by thinking about it from a meta-learning approach.

Jane: You can imagine some kind of system which is figuring out for each person how to optimize the way that it collects the training data so it minimizes the amount of time it takes somebody that needs to sit there and provide a lot of examples.

Abby: That's maybe the biggest opportunity there is: to limit, to be able to do things with a smaller amount of data than we currently need. How far are we in your mind from seeing AI truly learn to learn and transform aspects of our world in desirable ways?

Jane: Those are two separate questions. We already have algorithms that can learn to learn and can meta-learn. There are limitations with learning to learn and meta-learning though.

If you try to train your system on something that it's never seen before, it's hard for it to do something smart in that case or to be able to generalize and to do transfer learning. That's why the AI algorithms that we have can't do creative problem-solving. They can't come up with some insights on how to deal with a new problem that they’ve never seen before. If you present an algorithm with new data we would expect it to do something nonsensical.

Abby: That's one of the things that you'd taken on in that blog post and this idea of it being conceivably indistinguishable from magic is that creative element. An algorithm that could generate a song by listening to you humming it a few notes. Or you can give it a couple of bullet points, and it can write out the content piece. Do you think that this is in our future? How close is it?

Jane: I think it could be. I have no idea how close this future is because it almost feels like that there is some kind of step function in our future, that there is some bit of insight that hasn't been taken yet, or that we haven't taken certain steps.

The kinds of programs that we have now, AI algorithms, don't have the ability to replace a human. A human is still going to be the best at being able to generate creative, interesting stories and to be able to compose a unique song. We have generative models right now but they sound like they are remixes of all their training data.

If you look at those image GANs, they're all perfectly fine people, but they look like a mixture of a bunch of people that you've seen. Anytime that we see an algorithm that can do some creative design that makes sense, and that isn't a mismatch, a mashup of what it'd seen before, then I will say that maybe this magic is close.

Abby: Has there been a moment in your career so far that you would consider your most defining event?

Jane: There wasn't a single defining event, but there was a defining period of my life, towards the beginning of my post-doc, where I didn't know what I wanted to do with my future, with my career. I had just gotten a Ph.D. in applied physics and computational neuroscience, but I was a little bit lost.

There was something in the Zeitgeist - this was around the time that AlexNet made its breakthrough in the ImageNet Competition in 2012, although I wasn't consciously aware of this. But around this time, I became obsessed with artificial intelligence. It might have been influenced by science fiction books I was reading. I wanted to figure out how to program the brain. Everything became a lot clearer after that. I was like, "Okay, I need a background in neuroscience." And then, "Now I need to get a much better technical background." I somehow found myself at DeepMind after that.

Abby: Given your interest and your studies, it seems like solving intelligence and then having intelligence that can solve everything else was a natural next step for you. For people in the audience who are interested in taking a similar route or interested in DeepMind, were you recruited by them, or did you apply? Do you have a recommendation for them on how to catch the interest of the DeepMind team or perform well in interviews?

Jane: The path that I took is not necessarily applicable now because I applied for the role when they were very early. They hadn't had their first paper come out yet.

I sent them an email with a cover letter. I wound up not hearing back for about three months. That email went to my spam. I almost missed the recruiting email inviting me for an interview. One piece of advice I would give you: always check your spam.

Going back to what I was talking about earlier: it’s important to know what it is that you're passionate about. What question drives you? Then you can figure out how to get there. Don't worry about departments, papers, your education, or things that you think are going to hold you back. Just try to figure out, "Well, what are the pieces that I need? What kind of knowledge do I need to acquire to be able to tackle this problem?" You position yourself in a place to be able to tackle that and people will see that you're ready.

Watch the full version of this interview below:

This event was supported by Silicon Valley Bank, M12 Microsoft’s Venture Fund, SPIE, Hubspot for Startups.

Hope you enjoyed this interview as much as we did. Taking into account all the amazing feedback that we received, we’ll all meet again soon and have long thoughtful conversations on topics we care deeply about. Stay tuned and keep an eye out for our next Vision event someday soon.